Supabase is a popular managed Postgres, perfect as the transactional database backend for your application. But can it handle real-time, user-facing analytics? This guide will walk you through different approaches to building analytics with Supabase, from simple approaches within Supabase to scalable solutions that pair an optimized analytics database with Supabase.

Start by understanding your analytical requirements

Supabase can be used for analytics. Or it can't. The way you approach analytics with Supabase depends on things like latency requirements, the size of your data, the number of concurrent user requests, and the criticality of your application.

As a Postgres database, Supabase is not really optimized for analytics. It's a row-oriented database, so it isn't optimized for aggregating functions over column values. Postgres is a great "one size fits all" DB, but it will come up against limitations around. If you're interested in deep diving on the performance limitations of Postgres for analytics, check out our blog series on Postgres scalability, specifically the post on running OLAP workloads on Postgres.

Whether you choose to run your analytics on Supabase or another platform, consider these key factors before diving in:

Data Volume

- How many events/records per day?

- What's your expected growth rate?

- How long do you need to retain data?

Query Patterns

- What types of aggregations do you need?

- How complex are your queries?

- Do you need real-time results?

User Concurrency

- How many concurrent users?

- What's your peak load?

- What's your acceptable query latency?

Data Freshness

- How real-time do your analytics need to be?

- Can you tolerate some delay?

- Do you need sub-second updates?

Put simply: The larger your data, the more complex your queries, or the more demands you place on low-latency/high-concurrency, the more likely you are to need to run analytics off of Supabase.

Simple approaches to building analytics on Supabase

Here are the simplest ways to approach analytics on Supabase, all self-contained within Supabase. If you're just getting started with a small project, these are perfectly fine for testing a concept or even implementing a small feature in production.

The simplest approach is to query your production tables directly. This works well for:

- Small to medium-sized datasets (up to ~500K rows)

- Simple aggregations and filters

- Low query volume (< ~100 queries/minute)

- Non-real-time requirements (minutes of delay acceptable)

Assume you have a table called orders:

CREATE TABLE IF NOT EXISTS orders (

id BIGSERIAL PRIMARY KEY,

order_id UUID NOT NULL,

customer_id UUID NOT NULL,

order_date TIMESTAMP WITH TIME ZONE NOT NULL,

total_amount DECIMAL(10,2) NOT NULL,

status VARCHAR(50) NOT NULL,

payment_method VARCHAR(50) NOT NULL,

shipping_address JSONB NOT NULL,

items JSONB NOT NULL,

created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()

);

This is your primary transactional table. When someone creates an order on your site, you INSERT INTO orders. When someone needs to fetch their order information, you SELECT FROM orders. And so on.

Imagine you want to get some aggregate metrics for your orders, like this:

-- Example: Basic analytics query on production table

SELECT

DATE_TRUNC('day', created_at) as date,

SUM(total_amount) as total_revenue,

AVG(total_amount) as average_order_value

FROM orders

GROUP BY date

ORDER BY date DESC;

This will work for a relatively small orders table, and is simple to implement, but it doesn't come without drawbacks: namely that it will impact your production database, could result in high query latency or query timeouts, and doesn't use a database uniquely optimized for analytics.

To test how well Supabase can handle a use case like this, I created an orders table in Supabase and inserted increasing amounts of rows, rerunning this query to get p95 latency for each row count.

Here are the results on a raw table (no index):

| Rows in Table | p95 Query Latency (ms) |

|---|---|

| 1,000 | 71 ms |

| 10,000 | 69 ms |

| 50,000 | 122 ms |

| 100,000 | 121 ms |

| 200,000 | 188 ms |

| 500,000 | 1,988 ms |

| 1,000,000 | 3,427 ms |

You can see there's a huge performance cliff at 500k rows. We can see why in the query plan (for 1M rows):

| QUERY PLAN |

| ------------------------------------------------------------------------------------------------------------------------------------------------- |

| Finalize GroupAggregate (cost=101284.37..101424.37 rows=1000 width=72) (actual time=3272.956..3411.892 rows=1 loops=1) |

| Group Key: (date_trunc('day'::text, created_at)) |

| -> Gather Merge (cost=101284.37..101399.37 rows=1000 width=72) (actual time=3272.943..3411.880 rows=2 loops=1) |

| Workers Planned: 1 |

| Workers Launched: 1 |

| -> Sort (cost=100284.36..100286.86 rows=1000 width=72) (actual time=3265.982..3265.983 rows=1 loops=2) |

| Sort Key: (date_trunc('day'::text, created_at)) DESC |

| Sort Method: quicksort Memory: 25kB |

| Worker 0: Sort Method: quicksort Memory: 25kB |

| -> Partial HashAggregate (cost=100219.53..100234.53 rows=1000 width=72) (actual time=3265.961..3265.965 rows=1 loops=2) |

| Group Key: date_trunc('day'::text, created_at) |

| Batches: 1 Memory Usage: 73kB |

| Worker 0: Batches: 1 Memory Usage: 73kB |

| -> Parallel Seq Scan on orders (cost=0.00..97277.95 rows=588316 width=14) (actual time=0.215..3154.554 rows=500000 loops=2) |

| Planning Time: 1.072 ms |

| Execution Time: 3412.104 ms |

The query plan shows we're running 2 workers processing 500k rows each, doing a parallel sequential scan of the entire table.

Compare this to the query plan for 50,000 rows:

| QUERY PLAN |

| ----------------------------------------------------------------------------------------------------------------------- |

| Sort (cost=5450.27..5452.77 rows=1000 width=72) (actual time=41.794..41.795 rows=1 loops=1) |

| Sort Key: (date_trunc('day'::text, created_at)) DESC |

| Sort Method: quicksort Memory: 25kB |

| -> HashAggregate (cost=5382.95..5400.45 rows=1000 width=72) (actual time=41.768..41.773 rows=1 loops=1) |

| Group Key: date_trunc('day'::text, created_at) |

| Batches: 1 Memory Usage: 73kB |

| -> Seq Scan on orders (cost=0.00..5134.68 rows=49654 width=14) (actual time=0.380..31.467 rows=50000 loops=1) |

| Planning Time: 1.675 ms |

| Execution Time: 41.952 ms |

At 50k rows, everything fits in memory, so query execution is very fast. Once we exceed our memory bounds, however, we switch to disk, which slows things way down.

Optimization techniques

Of course, this is on a completely unoptimized table. There are many things we can do to improve analytical query performance on Supabase tables:

- CREATE INDEX: Indexes tell Postgres how to store our tables on disk, which can speed up common query patterns. We could index our table on

order_date, for example, to speed things up. - PARTITION BY RANGE: Partitioning can speed up queries by distributing parallel operations on smaller chunks of data. With proper indexing, queries may need to run on fewer partitions. Smaller partitions generally improve query performance, but beware of tradeoffs. Small partitions can also reduce write performance, add query plan overhead, and increase I/O pressure.

- VACUUM: Vacuum operations removes the vestiges of deleted or updated rows, handing the space back to the OS. This can reduce index bloat and improve index-only scans.

- CREATE MATERIALIZED VIEW: Materialized views in Supabase can be used to pre-compute aggregates, but keep in mind these have to be periodically refreshed with a cron job, and there's a tradeoff between refresh rate and data freshness. A fast refresh rate gives fresher aggregates, but requires more compute resources (theoretically, if refresh rate = QPS you don't get any benefit). Slow refresh rates save compute, but result in stale data.

- Use an extension: Supabase offers access to over 50 Postgres extensions. For example, the

timescaledbmay offer some benefits for running analytics on time-series data. As of this writing, Supabase did not natively offercstore_fdw, which is a columnar storage extension for Postgres which can be used to improve analytics performance.

Warning: I/O Pressure

Remember this: if you're running analytics on your main transactional table, these analytical queries will create I/O pressure that can impact your (perhaps more important) CRUD operations. The more complex the analytical query, the more likely it is to block I/O.

Just as a quick test, I ran two tests with a smattering of read/write ops on my orders table. I ran 60 read operations (SELECT) and 10 write operations (INSERT) per second. In the absence of analytical queries, all transactional R/W operations were successful.

However, when I added analytical queries of increasing complexity every 2 seconds, those transactional queries started to fail. My most complex analytical query caused 90% of read operations to fail, for example.

So, be careful. You don't want to impact your production Supabase database with analytical queries.

Here's the takeway on querying your production Supabase database directly for analytics:

Pros:

- Zero additional infrastructure setup

- Real-time access to your tables

- Simple to implement

- No data synchronization needed

- Can be optimized with various techniques

Cons:

- Can signigicantly impact production performance on transactional read/writes

- Not particularly scalable given PostgreSQL's analytical limitations

- No separation of concerns

- May hit connection limits under high load

- Even if you used materialized views, you have to manage their refreshes

- The top columnar extension isn't available on Postgres

Better approach: Use read replicas

Supabase supports read replicas, which can be used for analytics. A read replica is essentially a copy of your production database used only for reads. Read replicas are a pretty common approach to running analytics on Supabase (or any Postgres database).

This approach isolates the load placed by analytical queries to the read replica and away from your production database. You can query your read replica tables just like your production table using SELECT statements.

Read replicas on Supabase have dedicated endpoints and dedicated connection pools, which protects your primary database.

You can learn more about read replicas on Supabase here.

Why you shouldn't run your analytics on Supabase

Supabase is excellent for many use cases, and I understand why Supabase users are tempted to run analytics on their existing database. It's one less tool to learn and add to your stack, and there's some wisdom in the "Just use Postgres" approach.

Regardless, these are scenarios where you might need more specialized analytics capabilities:

High-Volume Time-Series Data

- Millions of events per day

- Need for sub-second query performance

- Complex time-based aggregations

- Example: User activity, web events, logs, traces, etc.

Real-Time Analytics Requirements

- Sub-second data freshness

- High concurrency (1000+ concurrent users)

- Complex real-time aggregations

- Example: Live dashboards, real-time monitoring, real-time personalization, etc.

Resource Optimization

- Need to reduce database costs

- Want to separate transactional and analytical workloads

- Need to scale analytics independently

Even if you don't currently have these constraints, the reality is that with the right technology (cough Tinybird cough), you can set up dedicated analytics infrastructure alongside Supabase with minimal mental overhead, zero additional infrastructure setup, and (perhaps) little to no additional cost.

Using Tinybird for analytics alongside Supabase

For those unfamiliar, Tinybird is a real-time analytics backend for software developers. It's built on top of the open source ClickHouse® database, a columnar database optimized for real-time analytics on large amounts of time-series data.

From a pure performance perspective, Tinybird will offer an immediate upgrade over Supabase. Consider the same orders table. The schema looks slightly different in Tinybird, as it's a different database, but the columns are the same:

DESCRIPTION >

Orders table containing customer order information with nested shipping and item details

SCHEMA >

`id` UUID `json:$.id`,

`order_id` UUID `json:$.order_id`,

`customer_id` UUID `json:$.customer_id`,

`order_date` DateTime `json:$.order_date`,

`total_amount` Float32 `json:$.total_amount`,

`status` String `json:$.status`,

`payment_method` String `json:$.payment_method`,

`shipping_address` String `json:$.shipping_address`,

`items` String `json:$.items`,

`created_at` DateTime `json:$.created_at` DEFAULT now()

ENGINE "MergeTree"

ENGINE_SORTING_KEY "order_date, customer_id, order_id"

Here are the performance metrics on Tinybird for running the same aggregating query on an orders table with zero optimization (raw table):

| Rows in Table | p95 Query Latency (ms) |

|---|---|

| 500,000 | 115 ms |

| 1,000,000 | 117 ms |

| 2,000,000 | 118 ms |

| 5,000,000 | 145 ms |

| 10,000,000 | 188 ms |

Tinybird is already orders of magnitude faster than Supabase for larger datasets (by the way, we consider 10M pretty small at Tinybird) without any optimization, and it's speed stays relatively stable (< 200ms for this query at this magnitude) even as row count grows.

Why Supabase users will love Tinybird

Performance aside, Tinybird provides an analytics backend that Supabase users will find intuitive and easy to use. Tinybird abstracts the complexities of the underlying database into an intuitive, developer-oriented workflow that emphasizes local-first development, AI-native features, CI/CD integrations, and fast/painless deployments.

In fact, Ben Hylak, co-founder/CTO of Raindrop, went so far as to say this (and no, we didn't pay him to say it):

If you're interested in giving Tinybird a try alongside your Supabase instance, here are a few approaches you can use to integrate the two.

Use Tinybird's PostgreSQL Table Function

The simplest way to get data from Supabase into Tinybird is using Tinybird's PostgreSQL table function:

-- In Tinybird

NODE copy_supabase

SQL >

%

SELECT *

FROM

postgresql(

'aws-0-eu-west-1.pooler.supabase.com:6543',

'postgres',

'orders',

{{tb_secret('my_pg_user')}},

{{tb_secret('my_pg_password')}}

)

WHERE created_at > (

SELECT max(created_at)

FROM orders

)

ORDER BY created_at ASC

TYPE copy

TARGET_DATASOURCE orders

COPY_SCHEDULE 0 * * * *

COPY_MODE append

This leverages Tinybird's copy pipes, which define scheduled batch operations. In this example, Tinybird is selecting from the Postgres orders table every hour, fetching only data with a created_at that is greater than the max created_at in the existing Tinybird table.

Copy pipes keep your Tinybird orders table in sync with your Supabase table at the schedule you define, and since filters are pushed down to the underlying Postgres table, it will minimize I/O ops on your Supabase table (and network costs, too).

You can then query your orders table in Tinybird for optimized analytics.

2. Using Change Data Capture (CDC)

Copy pipes work great when you don't have data freshness requirements faster than the copy job limit, but if you want real-time syncs from your Supabase table into Tinybird, you should use a Change Data Capture (CDC) approach.

There are a number of tools you can use to facilitate CDC between Supabase and Tinybird, including:

- Redpanda

- Confluent

- Sequin

Here's a guide from Sequin that explains how to replicate tables from Supabase (Postgres) to Tinybird.

Using this approach, you'll get near-real-time syncs between your Supabase Write-Ahead Log (WAL) and Tinybird.

Keep in mind that with this approach, Tinybird receives events as a change event stream. You can use the ReplacingMergeTree engine in your Tinybird data source to deduplicate events and construct the state of your Supabase table. More info on deduplication with ReplacingMergeTree here.

3. Direct Event Streaming

For the most scalable approach, you can bypass Supabase entirely and stream events directly to Tinybird using the Events API, an HTTP streaming endpoint. For example:

// Function to send events to Tinybird

async function sendEventsToTinybird(events) {

try {

const response = await fetch(`https://api.tinybird.co/v0/events?name=orders`, {

method: 'POST',

headers: {

'Authorization': `Bearer ${TINYBIRD_TOKEN}`,

'Content-Type': 'application/json'

},

body: JSON.stringify(events)

});

if (!response.ok) {

const error = await response.text();

throw new Error(`Tinybird API error: ${error}`);

}

const result = await response.json();

return result;

} catch (error) {

console.error('Error sending events to Tinybird:', error);

throw error;

}

}

This approachs works well when you need a separation of concerns between your transactional workloads (e.g. updating user data, tenant-level metadata, etc.) and your analytical workloads (aggregating time series data, etc.).

In most cases, it makes sense to simply keep transactional tables in Supabase and push time series data to Tinybird for analytics.

You can even combine the approaches above by using the PostgreSQL table function to sync relational tables into Tinybird while streaming events into Tinybird. Then when you query in Tinybird, you can enrich events with metadata using real-time joins.

Build real-time analytics APIs with Tinybird

Perhaps the best part of Tinybird is the ability to convert any SQL query into a RESTful API endpoint with a single CLI command.

Tinybird's pipe syntax uses SQL, so it will be familiar to Supabase users (though be aware that Tinybird uses a slightly different ClickHouse® dialect -> read this Postgres to ClickHouse® translation guide).

For example, we can create a file called aggregate.pipe with the following contents:

NODE endpoint

SQL >

SELECT

toDate(created_at) as date,

SUM(total_amount) as total_revenue,

AVG(total_amount) as average_order_value

FROM orders

WHERE created_at >= {{DateTime(start_date)}}

AND created_at < {{DateTime(end_date)}}

GROUP BY date

ORDER BY date DESC

TYPE endpoint

This defines the aggregating query we've been using in this example as a parameterized API endpoint, where start_date and end_date can be defined as query parameters in the API request.

Deploying this API is as simple as:

tb --cloud deploy

Your API will then have an endpoint URL secured by static tokens or JWTs:

tb --cloud endpoint url aggregate --language curl

# curl -X GET "https://api.us-east.tinybird.co/v0/pipes/aggregate.json?token=p.ey....ars"

With Tinybird's instant API deployments, integrating analytics into your application becomes very simple regardless of your language or framework.

Conclusion

Building user-facing analytics with Supabase is possible in many scenarios, but knowing when to add an analytics backend like Tinybird is crucial for scaling. Tinybird makes it so easy to start building analytics, that in most cases it makes sense to skip the "just use Postgres" mindset and put data into Tinybird from the start. Regardless, by understanding your requirements and choosing the right approach, you can build analytics that are both powerful and cost-effective.

Remember: the best solution is often a combination of approaches. Use Supabase for transactional data and real-time features, and complement it with Tinybird when you need analytics capabilities.

→ Get started with Tinybird for free

FAQ: Understanding Supabase and Tinybird

What's the main difference between Supabase and Tinybird?

Supabase is a managed PostgreSQL database optimized for transactional workloads (CRUD operations), while Tinybird is a real-time analytics backend built on ClickHouse®, optimized for analytical queries on time-series data. Think of Supabase as your operational database and Tinybird as your analytical database.

When should I use Supabase vs Tinybird?

Use Supabase for:

- User authentication and authorization

- CRUD operations (create, read, update, delete)

- Real-time subscriptions and updates

- Relational data with complex relationships

- Transactional data that needs ACID compliance

Use Tinybird for:

- Real-time analytics and aggregations

- Time-series data analysis

- High-volume event data processing

- Building analytics APIs

- Complex analytical queries that need sub-second performance

How do I integrate Supabase with Tinybird?

There are three main approaches:

- PostgreSQL Table Function: Use Tinybird's built-in PostgreSQL connector to periodically sync data

- Change Data Capture (CDC): Use tools like Redpanda, Confluent, or Sequin for real-time replication

- Direct Event Streaming: Send events directly to Tinybird's Events API while keeping transactional data in Supabase. Use JOINs at query time or in Tinybird's materialized views to enrich events with transactional data.

Can I run analytics directly on Supabase?

Yes, but with limitations:

- Works well for small datasets (<500K rows with few columns)

- Simple aggregations and filters

- Low query volume (<100 queries/minute)

- Non-real-time requirements

- Consider using read replicas to avoid impacting production performance

- Consider using extensions that may improve analytics performance

What are the performance differences between Tinybird and Supabase?

For analytical queries on a 1M row dataset:

- Supabase: ~3.4 seconds p95 latency

- Tinybird: ~117ms p95 latency

- Tinybird maintains consistent performance even as data grows to 10M+ rows

How do I handle real-time analytics with Supabase?

For real-time analytics:

- Keep transactional data in Supabase

- Stream events directly to Tinybird

- Use Tinybird's real-time query capabilities

- Build APIs with Tinybird's pipe syntax

- Join with Supabase data when needed using Tinybird's real-time join capabilities

How do I get started with both Supabase and Tinybird?

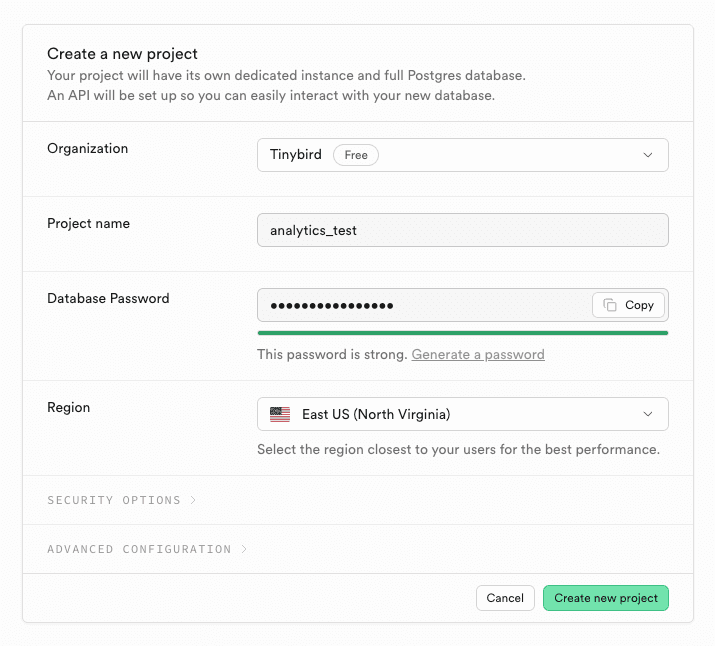

- Set up your Supabase project for transactional data

- Create a Tinybird account

- Choose an integration method (PostgreSQL sync, CDC, or direct streaming)

- Start building analytics APIs with Tinybird

- Use Supabase for real-time features and user data