In a previous post, I showed you how to build a real-time IoT analytics pipeline with Kafka and Tinybird. We created a system that consumed water meter readings from a Kafka topic and exposed insights (e.g. today's average water consumption) through API endpoints. Today, I'm going to take that project to the next maturity stage by setting up a proper CI/CD pipeline with GitHub Actions.

The end result will be a production-ready analytics backend with automated testing and deployment, enabling you to iterate quickly without breaking things.

Why bother with CI/CD for data projects?

When building data products, it's easy to fall into the trap of manual deployments and ad-hoc testing. But as your project grows, this becomes unsustainable:

- Schema changes might break existing API endpoints

- New data sources introduce complexity and potential conflicts

- Team members need a safe way to collaborate on the same data project

A proper CI/CD pipeline runs automated validations before changes hit production, and a standardized deployment process eliminates "it works on my machine" problems. Tinybird serves data in production, so good iteration practices matter as much as logically valid SQL.

Set up the GitHub repository

The project from the last post looks more or less like this:

```
/water-meters » tree -a
.
├── .env.local
├── .tinyb
├── README.md
├── connections
│   └── my_kafka_conn.connection
├── datasources
│   └── kafka_water_meters.datasource
├── endpoints
│   └── meter_measurements.pipe
└── fixtures
    ├── kafka_water_meters.ndjson
    └── kafka_water_meters.sql
```

First, initialize a Git repository for your project and create the workflows:

```shell
git init && tb create
```

Along with the other files and folders of a default Tinybird project, tb create generates the main characters of this post: the tinybird-ci.yml and tinybird-cd.yml files in .github/workflows.

```
.
├── .cursorrules
├── .gitignore
├── .github
│   └── workflows
│       ├── tinybird-cd.yml
│       └── tinybird-ci.yml
├── .tinyb
├── README.md
├── connections
│   └── my_kafka_conn.connection
├── datasources
│   └── kafka_water_meters.datasource
├── endpoints
│   └── meter_measurements.pipe
├── fixtures
│   ├── kafka_water_meters.ndjson
│   └── kafka_water_meters.sql
└── tests
    └── meter_measurements.yaml
```

Understanding Tinybird's default CI/CD workflows

First, let's examine the CI workflow that runs on pull requests:

```yaml
name: Tinybird - CI Workflow

on:
  workflow_dispatch:
  pull_request:
    branches:
      - main
      - master
    types: [opened, reopened, labeled, unlabeled, synchronize]

concurrency: ${{ github.workflow }}-${{ github.event.pull_request.number }}

env:
  TINYBIRD_HOST: ${{ secrets.TINYBIRD_HOST }}
  TINYBIRD_TOKEN: ${{ secrets.TINYBIRD_TOKEN }}

jobs:
  ci:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: '.'
    services:
      tinybird:
        image: tinybirdco/tinybird-local:latest
        ports:
          - 7181:7181
    steps:
      - uses: actions/checkout@v3
      - name: Install Tinybird CLI
        run: curl https://tinybird.co | sh
      - name: Build project
        run: tb build
      - name: Test project
        run: tb test run
      - name: Deployment check
        run: tb --cloud --host ${{ env.TINYBIRD_HOST }} --token ${{ env.TINYBIRD_TOKEN }} deploy --check
```

TL;DR: on every pull request to main (or master), whenever it is opened, reopened, receives a new commit, or has a label added or removed, the workflow runs these steps (the workflow_dispatch trigger also lets you run it manually):

- Start Tinybird Local as a service container and install the CLI

- Build the project with tb build

- Run the test suite with tb test run

- Validate the deployment on Cloud with tb --cloud deploy --check
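To make the trigger rules concrete, here is a small Python model of the pull_request filters in the CI workflow. It's a sketch under simplifying assumptions: an event is reduced to its base branch and activity type, and the function name is illustrative. It mirrors the YAML's logic, not how GitHub evaluates workflows internally.

```python
# Filters copied from the pull_request trigger in tinybird-ci.yml.
CI_BRANCHES = {"main", "master"}
CI_TYPES = {"opened", "reopened", "labeled", "unlabeled", "synchronize"}


def ci_runs_for(base_branch: str, action: str) -> bool:
    """Return True if a pull_request event targeting `base_branch` with
    activity type `action` matches the workflow's filters (simplified model)."""
    return base_branch in CI_BRANCHES and action in CI_TYPES


if __name__ == "__main__":
    # A new commit pushed to a PR that targets main arrives as "synchronize".
    print(ci_runs_for("main", "synchronize"))  # True
    # Closing a PR is not in the types list, so CI does not run.
    print(ci_runs_for("main", "closed"))  # False
    # PRs that target other branches are filtered out.
    print(ci_runs_for("develop", "opened"))  # False
```

Manual runs via workflow_dispatch are not subject to these filters.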

Note: in GitHub Actions there is no .tinyb file and you don't run tb login. Instead, you set two environment variables from repository secrets (TINYBIRD_HOST, TINYBIRD_TOKEN) and pass them as the global flags --host and --token to the tb --cloud deploy --check command.
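The same two-variable pattern works if you want to run the deployment check from a script outside GitHub Actions. A minimal Python sketch, assuming the tb CLI is installed and on your PATH; the helper names are hypothetical. It fails fast, before calling tb, when a variable is missing or empty.

```python
import os
import subprocess

REQUIRED_VARS = ("TINYBIRD_HOST", "TINYBIRD_TOKEN")


def missing_vars(env) -> list:
    """Names from REQUIRED_VARS that are unset or empty in `env`."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def deploy_check_cmd(env) -> list:
    """Build the same command as the CI 'Deployment check' step."""
    missing = missing_vars(env)
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    return [
        "tb", "--cloud",
        "--host", env["TINYBIRD_HOST"],
        "--token", env["TINYBIRD_TOKEN"],
        "deploy", "--check",
    ]


def run_deploy_check() -> None:
    """Run the check against Tinybird Cloud; raises CalledProcessError on failure."""
    # Passing a list (no shell) means the token is never re-parsed by a shell.
    subprocess.run(deploy_check_cmd(os.environ), check=True)
```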

The CD workflow is simpler: once code is pushed to main or master, a new deployment is created.

```yaml
name: Tinybird - CD Workflow

on:
  push:
    branches:
      - main
      - master

concurrency: ${{ github.workflow }}-${{ github.event.ref }}

env:
  TINYBIRD_HOST: ${{ secrets.TINYBIRD_HOST }}
  TINYBIRD_TOKEN: ${{ secrets.TINYBIRD_TOKEN }}

jobs:
  cd:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Install Tinybird CLI
        run: curl https://tinybird.co | sh
      - name: Deploy project
        run: tb --cloud --host ${{ env.TINYBIRD_HOST }} --token ${{ env.TINYBIRD_TOKEN }} deploy
```

While these workflows provide a good starting point, you can of course customize the YAML based on your team's needs. Below are some customization patterns I've seen from our customers:

Use multiple environments with staging and production

Many teams maintain separate workspaces for staging and production:

- Staging workspace connects to staging Kafka topics

- Production workspace connects to production Kafka topics

You can manage this easily by setting different secrets for each environment.
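As a sketch of the per-environment setup, the helper below picks a host and token based on the deployment target. It assumes hypothetical variable names with a STAGING_ or PRODUCTION_ prefix (e.g. STAGING_TINYBIRD_TOKEN) exported into the job; that naming is illustrative, not a Tinybird convention. With GitHub environments, you could instead keep the names TINYBIRD_HOST and TINYBIRD_TOKEN and scope a different value to each environment.

```python
# Hypothetical naming scheme: <ENVIRONMENT>_TINYBIRD_HOST / <ENVIRONMENT>_TINYBIRD_TOKEN.
ENVIRONMENTS = ("staging", "production")


def credentials_for(target: str, env: dict) -> tuple:
    """Return (host, token) for the target environment.
    Raises ValueError for an unknown target and KeyError if a variable is absent."""
    if target not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {target!r}")
    prefix = target.upper()
    return env[f"{prefix}_TINYBIRD_HOST"], env[f"{prefix}_TINYBIRD_TOKEN"]
```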

Deploy to production using various approaches

- Continuous deployment to staging on every PR commit, then manual deployment to production

- Automatic deployment to staging after PR merge, then manual deployment to production

- Full automation: staging on PR merge, production after successful staging tests
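The full-automation option boils down to a gate: production is deployed only if the staging deployment and its tests succeed. Here is that control flow as a Python sketch, with deploying and testing passed in as callables (hypothetical hooks; in a real workflow they would be separate jobs or steps that invoke tb).

```python
from typing import Callable, List


def promote_through_staging(
    deploy: Callable[[str], bool],
    run_tests: Callable[[str], bool],
) -> List[str]:
    """Deploy to staging, test it, and deploy to production only if both
    succeed. Returns the environments that were deployed, in order."""
    deployed: List[str] = []
    if not deploy("staging"):
        return deployed
    deployed.append("staging")
    if not run_tests("staging"):
        # Stop here: in this model, production is left untouched.
        return deployed
    if deploy("production"):
        deployed.append("production")
    return deployed
```

For example, when the staging tests fail, promote_through_staging returns ["staging"] and never calls deploy("production").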

Use Tinybird's staging deployment state

You can also create a deployment in staging state, which lets you validate changes against production data before going live. This is particularly useful for testing new pipes against real data volumes, validating schema changes, and ensuring performance at production scale. It also lets you take your Tinybird changes live at the same time as the rest of your app.

Pro tip before closing the workflows section: check out Blacksmith to speed up your GitHub Actions.

Push your changes to GitHub

After verifying that your .gitignore covers everything you want to keep secret, like .tinyb, .env, and .env.local, commit your changes and push them to GitHub:

```shell
git add .
git commit -m "Initial commit with water meters analytics project"
git branch -M main
git remote add origin https://github.com/your_user_name/water-meters.git
git push -u origin main
```
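Before running git add ., you can double-check that .gitignore really lists the secret-bearing files. A minimal Python sketch: it only looks for literal, uncommented entries and does not evaluate glob patterns or ! negations, so git check-ignore remains the authoritative check. The helper names are illustrative.

```python
from pathlib import Path

# Files from this project that hold credentials and must never be committed.
SECRET_FILES = (".tinyb", ".env", ".env.local")


def missing_ignores(gitignore_text: str, required=SECRET_FILES) -> list:
    """Return required entries that don't appear as literal lines in .gitignore.
    Simplification: globs (e.g. '.env*') and '!' negations are not evaluated."""
    entries = {
        line.strip().lstrip("/")
        for line in gitignore_text.splitlines()
        if line.strip() and not line.strip().startswith("#")
    }
    return [name for name in required if name not in entries]


def check_gitignore(path: str = ".gitignore") -> list:
    """Read .gitignore (treating a missing file as empty) and report gaps."""
    p = Path(path)
    return missing_ignores(p.read_text() if p.exists() else "")
```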

I recommend following best practices here and protecting your main branch, so that every change has to go through a new branch and a pull request.

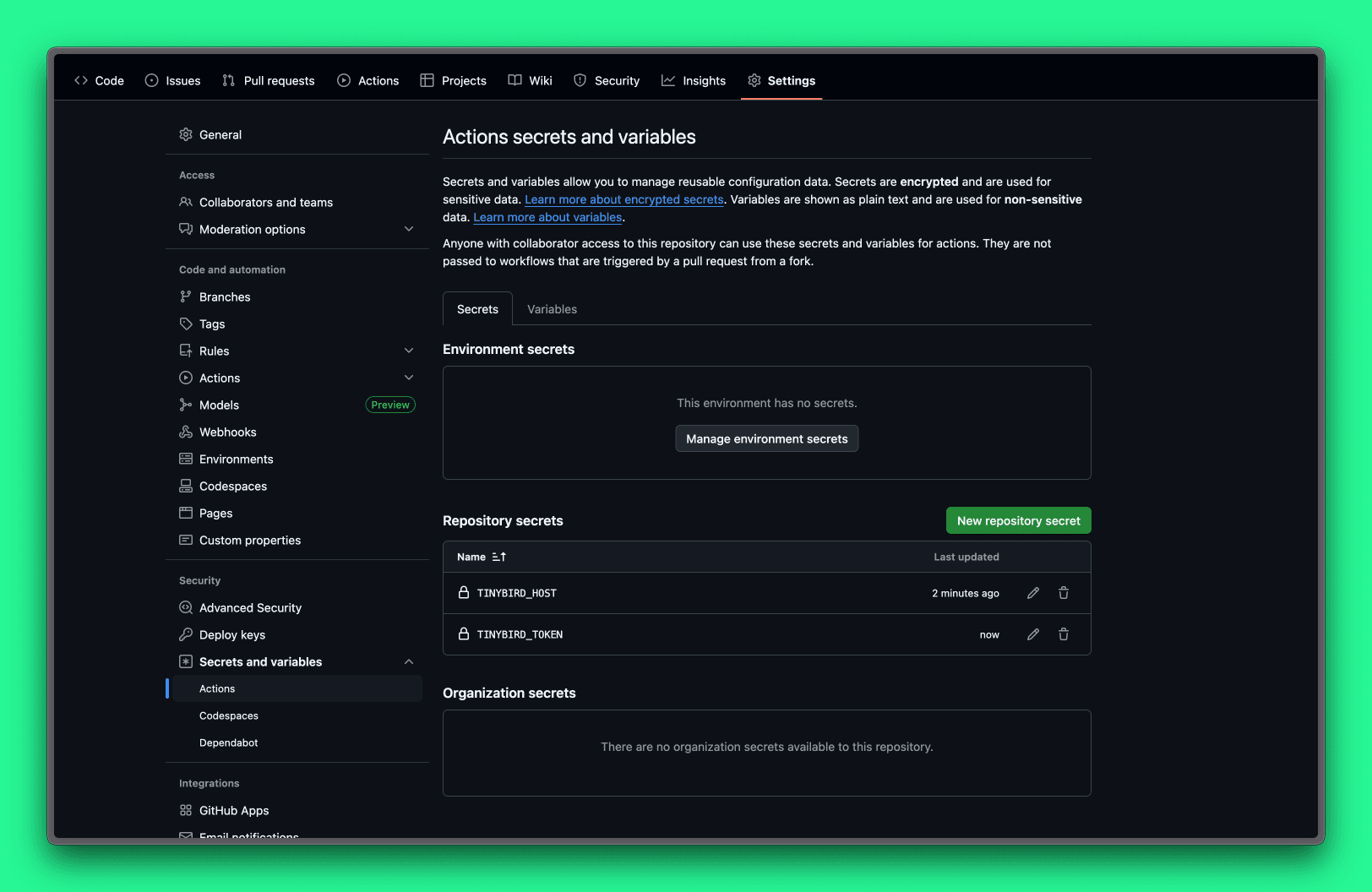

Set up repository secrets

For your GitHub Actions workflows to work, you need to add the following secrets to your repository:

- TINYBIRD_HOST: the API URL of your Tinybird workspace

- TINYBIRD_TOKEN: your Tinybird admin user token

To get the host, run tb --cloud info and use the api value from the Tinybird Cloud section:

```
tb --cloud info

» Tinybird Cloud:
----------------------------------------------------------------------------------------
user: gonzalo@tinybird.co
workspace_name: gnz_water_meters
workspace_id: ******
token: p.ey...4bYTVUhs
user_token: p.ey...bh0J-0xs
api: https://api.europe-west2.gcp.tinybird.co # <- this one
ui: https://cloud.tinybird.co/gcp/europe-west2/gnz_water_meters
----------------------------------------------------------------------------------------

» Tinybird Local:
----------------------------------------------------------------------------------------
user: gonzalo@tinybird.co
workspace_name: gnz_water_meters
workspace_id: ******
token: p.ey...h-h4azjo
user_token: p.ey...rufYuZQA
api: http://localhost:7181
ui: https://cloud.tinybird.co/local/7181/gnz_water_meters
----------------------------------------------------------------------------------------

» Project:
------------------------------------------------------
current: /Users/gnz-tb/tb/fwd/demos/water-meters
.tinyb: /Users/gnz-tb/tb/fwd/demos/water-meters/.tinyb
project: /Users/gnz-tb/tb/fwd/demos/water-meters
------------------------------------------------------
```
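If you script this step, you can pull the Cloud api value out of that output. This Python sketch assumes text laid out like the sample above (a "» Tinybird Cloud:" section header followed by key: value lines); that layout is taken from this example only and may change between CLI versions, so treat the parser as an assumption.

```python
from typing import Optional


def cloud_api_host(info_output: str) -> Optional[str]:
    """Return the 'api' value from the Tinybird Cloud section of
    `tb --cloud info` output, or None if it isn't found."""
    in_cloud = False
    for raw in info_output.splitlines():
        line = raw.strip()
        if line.startswith("»"):
            # Section headers look like "» Tinybird Cloud:" or "» Tinybird Local:".
            in_cloud = line.startswith("» Tinybird Cloud")
            continue
        if in_cloud and line.startswith("api:"):
            value = line[len("api:"):]
            # Drop trailing annotations such as "# <- this one".
            return value.split("#", 1)[0].strip()
    return None
```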

For the token, run tb --cloud token copy "admin <your_email>", which copies your admin token to the clipboard:

```
tb --cloud token copy "admin gonzalo@tinybird.co"
Running against Tinybird Cloud: Workspace gnz_water_meters
** Token 'admin gonzalo@tinybird.co' copied to clipboard
```

In GitHub, navigate to your repository settings, then to Secrets and Variables > Actions, and add these secrets.

Verify secrets in your Tinybird environment

Make sure that you've set secrets for your Kafka server in production:

```shell
tb --cloud secret ls
```

If they are not set:

```shell
tb --cloud secret set KAFKA_SERVERS <broker:port>
tb --cloud secret set KAFKA_KEY <key>
tb --cloud secret set KAFKA_SECRET <secret>
```
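If you keep the Kafka credentials in local environment variables with the same names, you can generate those three tb --cloud secret set calls instead of typing them. A minimal Python sketch, assuming tb is on your PATH and that your environment uses exactly these names; the helper names are illustrative. Passing arguments as a list avoids shell quoting problems with special characters in secrets.

```python
import subprocess

# Secret names from the tb --cloud secret set commands in this post.
KAFKA_SECRETS = ("KAFKA_SERVERS", "KAFKA_KEY", "KAFKA_SECRET")


def secret_set_commands(env, names=KAFKA_SECRETS) -> list:
    """Build one `tb --cloud secret set NAME VALUE` command per secret.
    Raises ValueError if any value is missing, so nothing is half-configured."""
    missing = [n for n in names if not env.get(n)]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    return [["tb", "--cloud", "secret", "set", n, env[n]] for n in names]


def run_commands(commands: list) -> None:
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Usage would be run_commands(secret_set_commands(os.environ)) after importing os.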

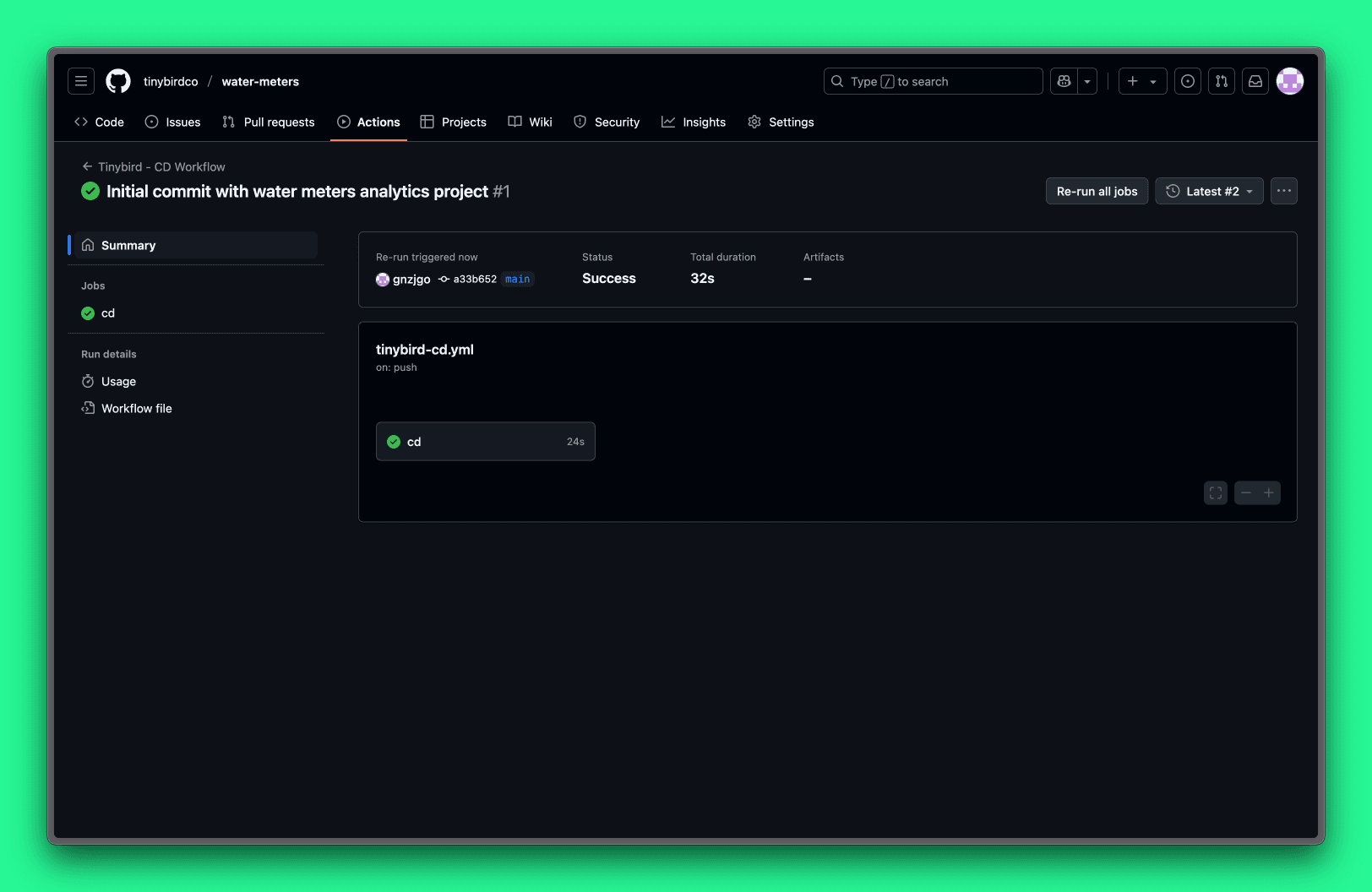

I didn't have the Actions secrets set when I pushed the first commit, so CD failed. After setting the GitHub and Tinybird Cloud secrets, you can re-run the CD action and your project will be deployed.

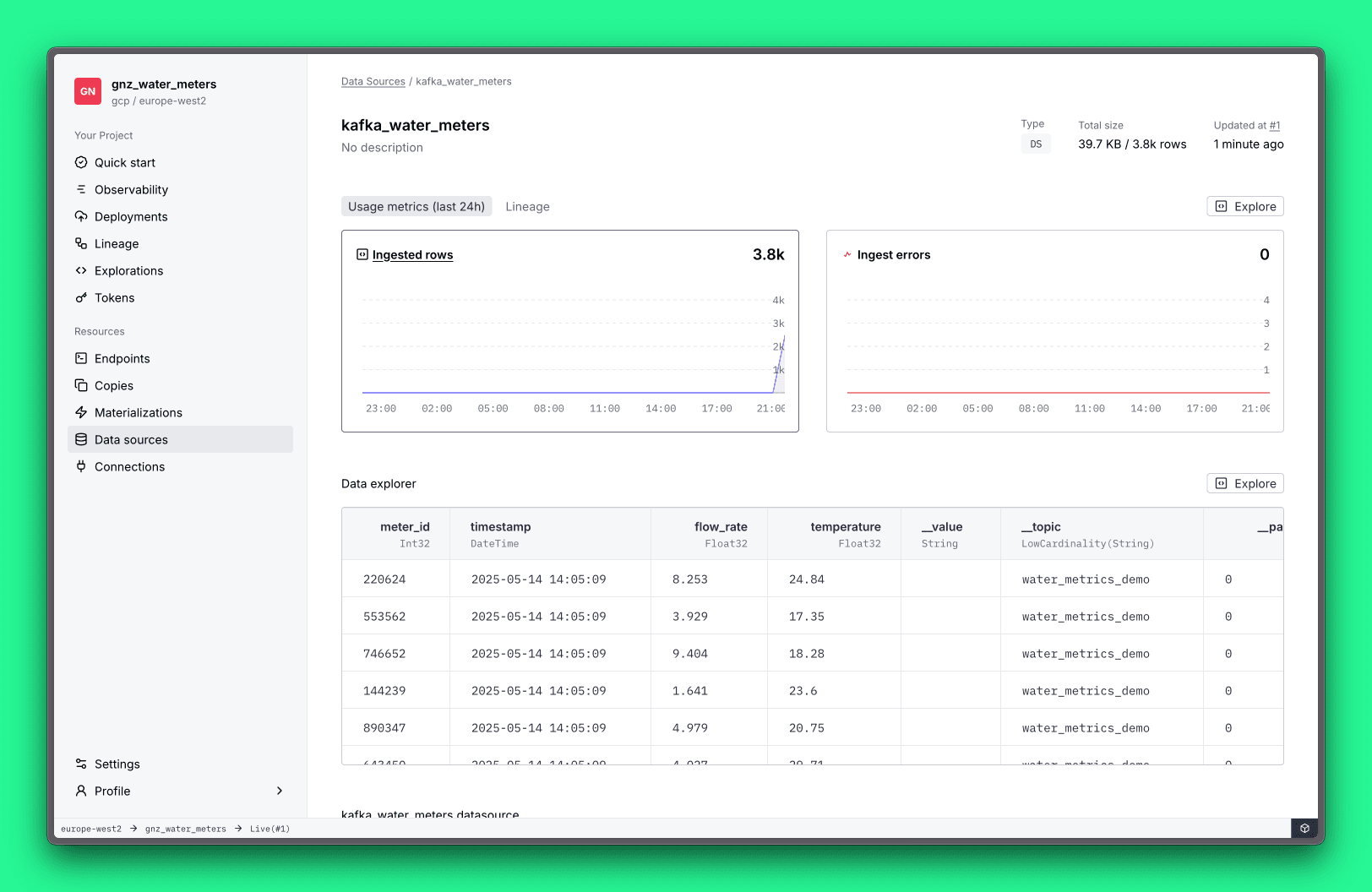

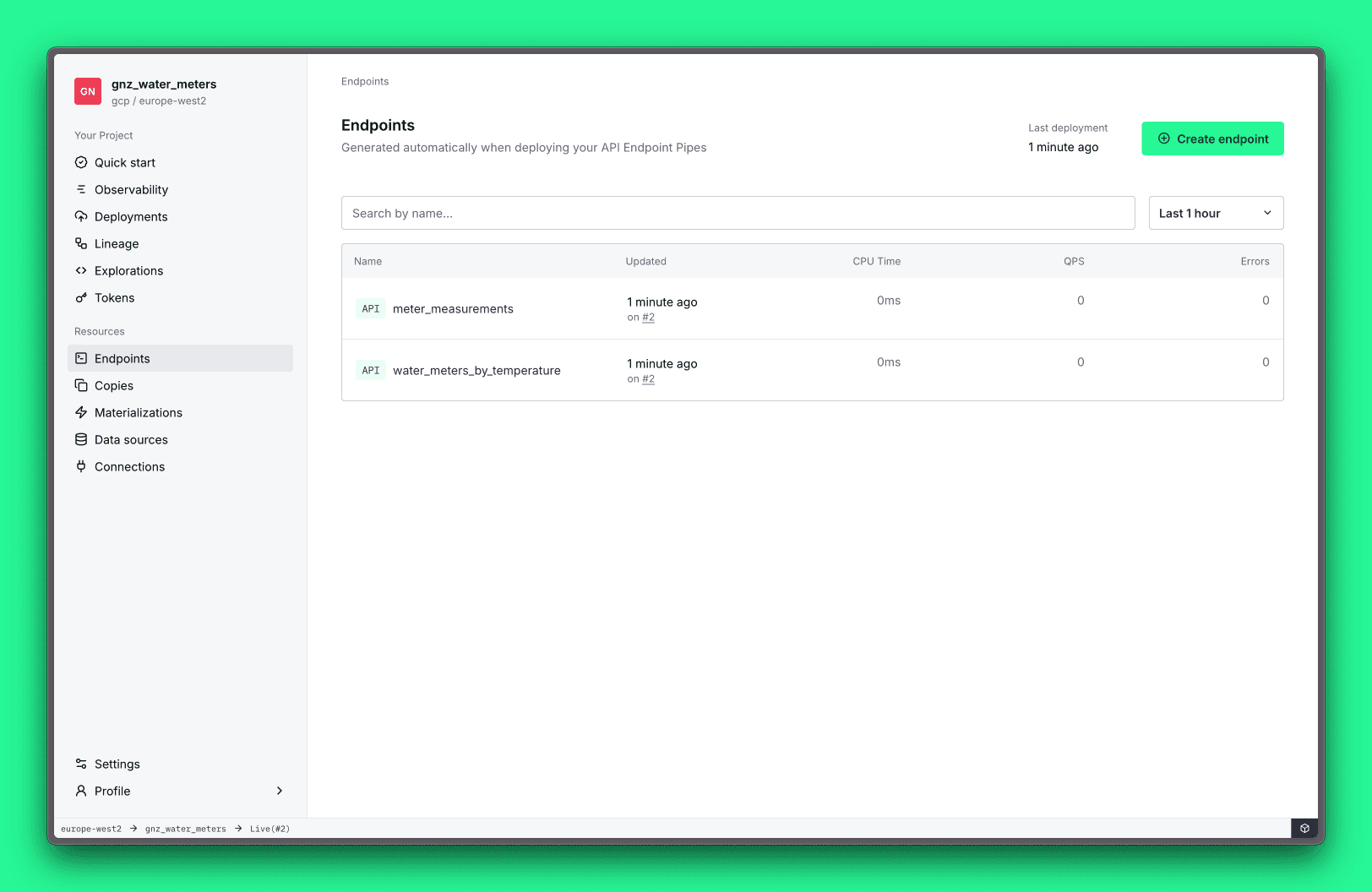

And once the CD flow is green, your project is deployed in Tinybird Cloud.

You can check it with tb --cloud open.

Add another endpoint in a new branch

You know how to create new endpoints, so I won't spend much time here. But here's how you put it all together to iterate on your real-time analytics project in a new branch and merge it through a pull request:

```shell
git checkout -b too_cold_devices && tb create --prompt "could you help me create an endpoint to retrieve the water meters below a temperature threshold that I'll pass as query param"
```

Assume it creates the following endpoint pipe:

```
DESCRIPTION >
    Returns water meters that have temperatures below a specified threshold

NODE water_meters_threshold_node
SQL >
    %
    SELECT
        meter_id,
        argMax(temperature, timestamp) as current_temperature,
        argMax(flow_rate, timestamp) as current_flow_rate,
        max(timestamp) as last_updated
    FROM kafka_water_meters
    GROUP BY meter_id
    HAVING current_temperature < {{Float32(temperature_threshold, 20.0)}}
    ORDER BY current_temperature ASC

TYPE endpoint
```
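To see what this query returns, here is the same logic in plain Python over a few in-memory rows: argMax(temperature, timestamp) keeps the temperature from each meter's latest reading, HAVING filters on that latest value, and the result is sorted ascending. It only illustrates the SQL semantics with hypothetical rows; it is not how Tinybird executes the query. Note that a meter whose latest reading is warm drops out even if an older reading was below the threshold.

```python
from datetime import datetime


def below_threshold(rows, temperature_threshold=20.0):
    """Mimic the endpoint: take each meter's latest reading, keep meters whose
    latest temperature is below the threshold, sort by that temperature."""
    latest = {}
    for row in rows:
        current = latest.get(row["meter_id"])
        # argMax(x, timestamp): keep the row with the greatest timestamp.
        if current is None or row["timestamp"] > current["timestamp"]:
            latest[row["meter_id"]] = row
    result = [
        {
            "meter_id": r["meter_id"],
            "current_temperature": r["temperature"],
            "current_flow_rate": r["flow_rate"],
            "last_updated": r["timestamp"],  # equals max(timestamp) per meter
        }
        for r in latest.values()
        if r["temperature"] < temperature_threshold
    ]
    return sorted(result, key=lambda r: r["current_temperature"])


if __name__ == "__main__":
    # Hypothetical readings: meter 2 was cold earlier, but its latest reading is warm.
    rows = [
        {"meter_id": 1, "temperature": 19.0, "flow_rate": 4.1, "timestamp": datetime(2025, 5, 16, 2, 0, 43)},
        {"meter_id": 2, "temperature": 10.0, "flow_rate": 2.0, "timestamp": datetime(2025, 5, 16, 1, 0)},
        {"meter_id": 2, "temperature": 22.0, "flow_rate": 2.5, "timestamp": datetime(2025, 5, 16, 2, 0)},
    ]
    for row in below_threshold(rows):
        print(row)  # only meter 1 is returned
```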

You should add tests whenever you create an endpoint. Create them, run them, and adapt them if they fail:

```shell
tb test create water_meters_by_temperature && tb test run
```

```yaml
- name: default_temperature_threshold
  description: "Test with default temperature threshold of 20.0ºC"
  parameters: ''
  expected_result: |
    {"meter_id":1,"current_temperature":19,"current_flow_rate":4.1,"last_updated":"2025-05-16 02:00:43"}

- name: low_temperature_threshold
  description: "Test with a low temperature threshold of 15.0ºC to find very cold\
    \ water meters"
  parameters: temperature_threshold=15.0
  expected_result: ''

- name: high_temperature_threshold
  description: "Test with a high temperature threshold of 25.0ºC to find most water\
    \ meters"
  parameters: temperature_threshold=25.0
  expected_result: |
    {"meter_id":1,"current_temperature":19,"current_flow_rate":4.1,"last_updated":"2025-05-16 02:00:43"}

- name: critical_low_temperature
  description: "Test with a critical low temperature of 5.0ºC to find meters at\
    \ risk of freezing"
  parameters: temperature_threshold=5.0
  expected_result: ''
```
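Each test case is, roughly, the endpoint called with the listed query parameters. Once the pipe is deployed, you can make the same request yourself. This sketch only builds the request; it assumes the pipe is named water_meters_by_temperature (the name passed to tb test create above) and uses Tinybird's /v0/pipes/<name>.json endpoint path. The token goes in an Authorization: Bearer header rather than the URL so it doesn't end up in access logs.

```python
from urllib.parse import urlencode
from urllib.request import Request


def endpoint_request(host: str, token: str, pipe: str, **params) -> Request:
    """Build a GET request for a Tinybird endpoint with optional query params."""
    query = f"?{urlencode(params)}" if params else ""
    url = f"{host.rstrip('/')}/v0/pipes/{pipe}.json{query}"
    return Request(url, headers={"Authorization": f"Bearer {token}"})
```

Sending it is then a matter of urllib.request.urlopen(endpoint_request(host, token, "water_meters_by_temperature", temperature_threshold=15.0)).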

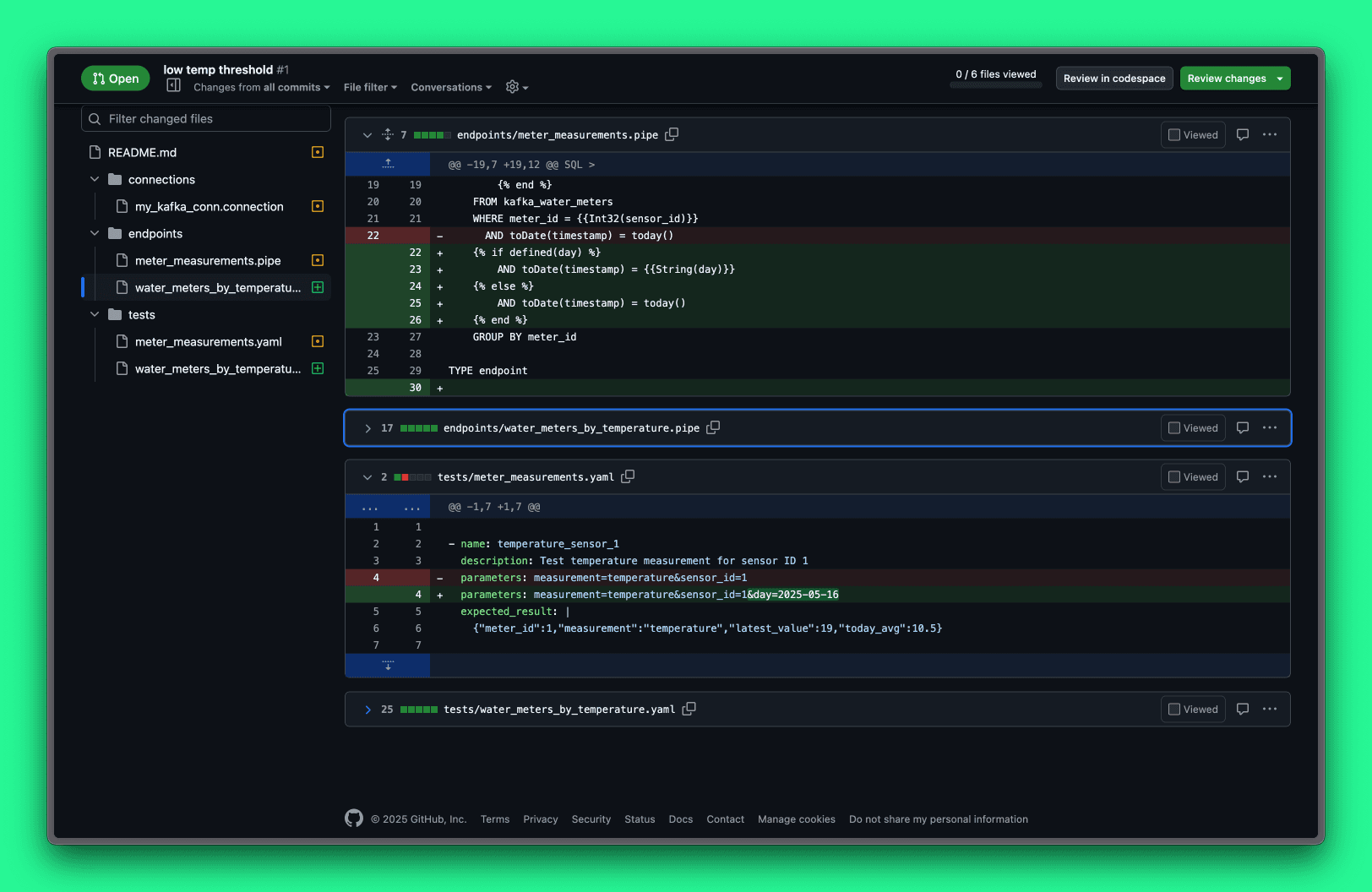

Once you're happy with them, use the typical workflow: push the branch, create a PR, validate the pipeline, and merge.

```shell
git add . && git commit -m "low temp threshold" && git push --set-upstream origin too_cold_devices
```

Well, not so fast.

What happened? The previous CD workflow worked because the Kafka secrets were set in our Tinybird Cloud workspace, but CI spins up a fresh Tinybird Local instance inside the GitHub Action, where those secrets don't exist, so the connection needs default values for them.

The values don't need to be real (in fact, tb build is a stateless command), but they do need to be there, so add defaults to the tb_secret calls in the connection:

```
TYPE kafka
KAFKA_BOOTSTRAP_SERVERS pkc-l6wr6.europe-west2.gcp.confluent.cloud:9092
KAFKA_SECURITY_PROTOCOL SASL_SSL
KAFKA_SASL_MECHANISM PLAIN
KAFKA_KEY {{ tb_secret("KAFKA_KEY", "key") }}
KAFKA_SECRET {{ tb_secret("KAFKA_SECRET", "secret") }}
```
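The second argument to tb_secret is the fallback used when the secret isn't defined, which is what lets the throwaway Tinybird Local instance in CI build the connection. A tiny Python model of that fallback, for intuition only; it is not Tinybird's implementation, and the function name is hypothetical.

```python
from typing import Mapping, Optional


def resolve_secret(secrets: Mapping[str, str], name: str, default: Optional[str] = None) -> str:
    """Model of tb_secret(name, default): use the workspace secret if it is set,
    otherwise fall back to the default; with neither, fail."""
    if name in secrets:
        return secrets[name]
    if default is not None:
        return default
    raise KeyError(f"secret {name!r} is not set and has no default")
```

In CI the secrets mapping is empty, so resolve_secret({}, "KAFKA_KEY", "key") yields the placeholder "key", while in Cloud the real value wins.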

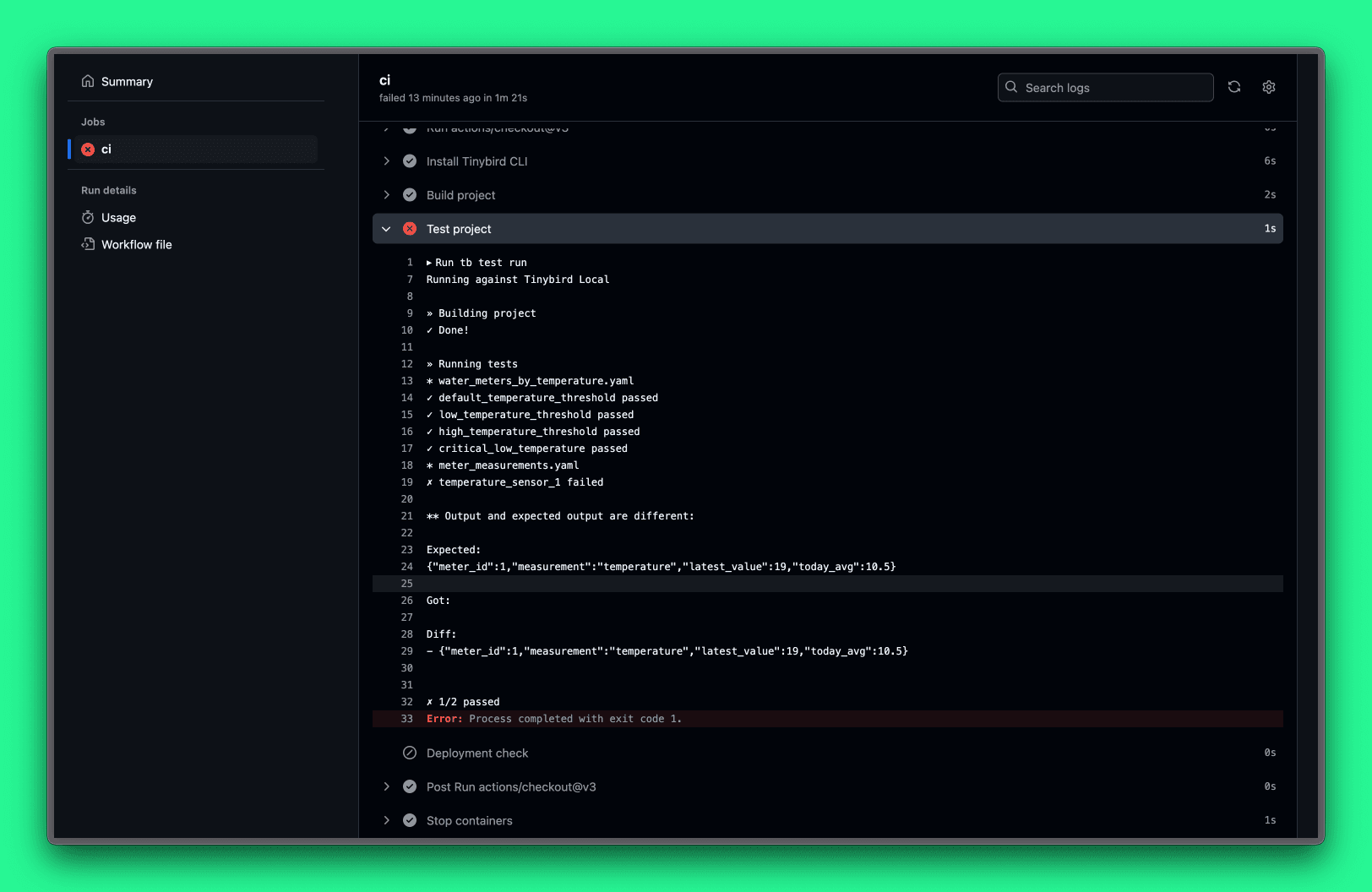

Push the commit to the branch, run new tests, and... another error? Let's check.

Hmm... it worked when I wrote the previous post, but this time it failed in my local build as well.

It turns out this was due to a time condition in the pipe with a hardcoded today(). Fixtures are static, so the tests passed on the day I created them, but not anymore. Something to keep in mind when creating tests!
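The underlying problem is easy to reproduce outside Tinybird: any filter relative to "today" gives a static fixture an expiry date. A minimal Python illustration, assuming a filter that keeps readings from a single day; passing the reference date explicitly, instead of relying on date.today(), keeps the test deterministic. The post doesn't show exactly how the pipe was fixed, so this is only the general principle, and the names are illustrative.

```python
from datetime import date, datetime
from typing import Optional

# A static fixture row (like kafka_water_meters.ndjson): its timestamp never changes.
FIXTURE = [{"meter_id": 1, "timestamp": datetime(2025, 5, 16, 2, 0, 43)}]


def readings_for_day(rows, day: Optional[date] = None):
    """Keep readings whose timestamp falls on `day`.
    Defaulting to date.today() is the Python analogue of a hardcoded today():
    the same fixture gives different results depending on when the test runs."""
    day = day or date.today()
    return [r for r in rows if r["timestamp"].date() == day]
```

readings_for_day(FIXTURE, date(2025, 5, 16)) always returns the fixture row, while readings_for_day(FIXTURE) only did so on 16 May 2025.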

Once that's fixed, you're finally good with CI: you can merge and see that CD passes as well.

And your second endpoint is live in the cloud workspace. You've successfully iterated on your real-time data project using CI/CD.

By the way, I haven't mentioned this yet, but it's important: this process works even when data is actively flowing. Tinybird's tb deploy command is super-powerful here: it handles the backfilling and data migration from one deployment to the next, so you don't have to worry about it. Even if my Kafka topic is producing thousands of messages a second, I can just deploy through CI/CD and Tinybird handles it.

Conclusion

The power of combining streaming data from Kafka with Tinybird's real-time analytics capabilities is evident in how quickly we built this entire system. With a CI/CD pipeline in place, you can continue to iterate and improve your analytics APIs without worrying about breaking existing functionality.

The ability to develop locally and deploy to production with a single command makes it easy for developers to build sophisticated data products without getting bogged down in infrastructure management.

Want to try building your own analytics API? Sign up for Tinybird and get started today for free.