In a previous blog post I shared how to build real-time analytics applications on top of Iceberg using Tinybird's copy pipes, materialized views, and API endpoints.

There's an alternative architecture, however, that combines event streaming, durable data storage, and real-time analytics to build powerful analytical applications. It uses Redpanda Iceberg topics with:

- Redpanda as a modern, efficient, Kafka API-compatible event streaming platform with Iceberg support

- Apache Iceberg as a durable, versioned table format for your data lake

- Tinybird for seamless high-performance analytics APIs and developer experience

You get the benefits of event sourcing (complete audit trail, replay capability, event-driven processing) together with the durability and query capabilities of a data lake, plus the speed of real-time analytics.

Why This Architecture Makes Sense

Traditional analytics architectures present several problems:

- Data warehouses are too slow for real-time use cases

- Kafka on its own typically doesn't retain full history, and querying it directly makes backfills difficult

- Traditional data lakes lack real-time capabilities

- Building your own analytics APIs is time-consuming

This architecture offers the following benefits:

- Complete Event History: Redpanda stores all events and can stream them to Iceberg

- Durable Storage: Iceberg provides a reliable, versioned data lake with schema evolution

- Real-time + Historical Analysis: Tinybird can process both historical data (from Iceberg) and real-time data (from Redpanda)

- Low-latency APIs: Tinybird generates optimized APIs for your applications

- Scalability: All components scale horizontally

- Developer-friendly: Simple local setup for development, with cloud deployment options

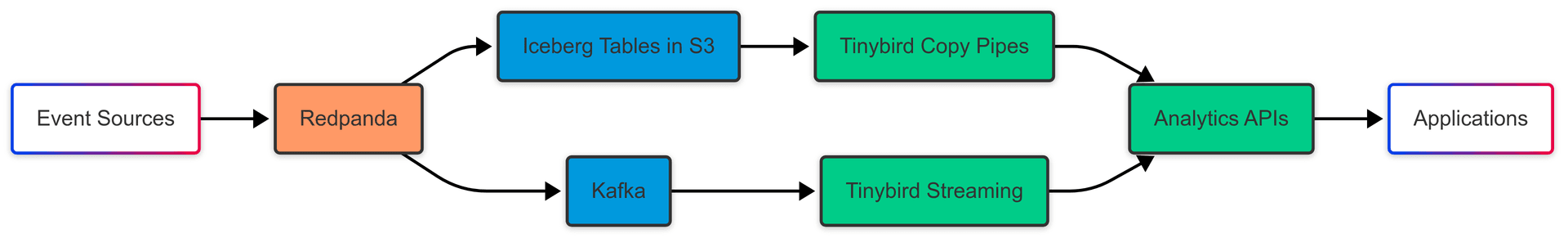

Architecture Overview

- Events are published to Redpanda topics

- Redpanda's Iceberg integration writes events to Iceberg tables in S3

- Tinybird uses copy pipes to load historical data from Iceberg

- Tinybird connects directly to Redpanda for real-time streaming

- Tinybird combines historical and real-time data for analytics

- APIs published by Tinybird serve applications with millisecond latency

Practical Implementation

Setting Up Redpanda Locally

Follow this Redpanda lab to set up Redpanda locally with Iceberg.

Instead of MinIO, we'll use our own S3 bucket at s3://redpanda-iceberg. You can find the configuration in this gist.

Configure a topic with Iceberg support. Test that you can send data and that it's written to the S3 bucket under s3://redpanda-iceberg/redpanda/key_value/. For simplicity, this example uses a schemaless topic, but you should use the schema registry:

# Create a topic

rpk topic create key_value --topic-config=redpanda.iceberg.mode=key_value

# produce some data

# one "key value" record per line, so every record matches the '%k %v\n' format in any shell
printf '%s\n' 'key {"user_id":2324,"event_type":"BUTTON_CLICK","ts":"2024-11-25T20:23:59.380Z"}' 'key {"user_id":3333,"event_type":"SCROLL","ts":"2024-11-25T20:24:14.774Z"}' 'key {"user_id":7272,"event_type":"BUTTON_CLICK","ts":"2024-11-25T20:24:34.552Z"}' | rpk topic produce key_value --format='%k %v\n'

# make sure you can consume it

rpk topic consume key_value

{

"topic": "key_value",

"key": "key",

"value": "{\"user_id\":2324,\"event_type\":\"BUTTON_CLICK\",\"ts\":\"2024-11-25T20:23:59.380Z\"}",

"timestamp": 1747906361903,

"partition": 0,

"offset": 0

}
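Note that `value` in the consumed record is itself a JSON document serialized as a string, so anything reading this output has to decode twice. Here's a minimal Python sketch of that, assuming the record shape printed above (the helper name `decode_record` is made up for this example):

```python
import json

# One record exactly as printed by `rpk topic consume` in the example above.
RECORD = r'''
{
  "topic": "key_value",
  "key": "key",
  "value": "{\"user_id\":2324,\"event_type\":\"BUTTON_CLICK\",\"ts\":\"2024-11-25T20:23:59.380Z\"}",
  "timestamp": 1747906361903,
  "partition": 0,
  "offset": 0
}
'''


def decode_record(raw: str) -> dict:
    """Parse the outer record, then parse the JSON string held in `value`."""
    record = json.loads(raw)
    record["value"] = json.loads(record["value"])
    return record


event = decode_record(RECORD)["value"]
print(event["user_id"], event["event_type"], event["ts"])
```

The inner fields (`user_id`, `event_type`, `ts`) are the ones the Tinybird schema later in this post extracts with JSONPaths.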

Setting Up Tinybird Locally

curl https://tinybird.co | sh

tb login

tb local start

Redpanda and Tinybird both run locally in Docker containers. To connect them, do the following:

# connect the redpanda container to tinybird local

docker network connect tb-local redpanda-0

# if needed, edit /etc/hosts on your host and in the tinybird-local Docker container

docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' redpanda-0

192.168.107.5

# in your host /etc/hosts

127.0.0.1 redpanda

# in tinybird-local: open a shell, then add this entry to /etc/hosts

docker exec -it tinybird-local /bin/bash

192.168.107.5 redpanda redpanda-0
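Before moving on, it can help to confirm that the hostname resolves and the broker port accepts connections from wherever the client runs. This is a small Python sketch using only the standard library; `can_connect` is a hypothetical helper, and it only checks TCP reachability, not that a Kafka broker is actually answering:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

For example, `can_connect("redpanda", 9092)` uses the hostname added to /etc/hosts above and the broker port from the connection file in the next section. If it returns `False`, recheck the Docker network and the hosts entries.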

Connecting Tinybird to Redpanda and Iceberg

Create a Kafka data source in Tinybird using this connection:

# connections/redpanda.connection

TYPE kafka

KAFKA_BOOTSTRAP_SERVERS {{ tb_secret("KAFKA_SERVER", "redpanda:9092") }}

KAFKA_SECURITY_PROTOCOL PLAINTEXT

KAFKA_SASL_MECHANISM PLAIN

KAFKA_KEY {{ tb_secret("KAFKA_KEY", "") }}

KAFKA_SECRET {{ tb_secret("KAFKA_SECRET", "") }}

And this schema:

# datasources/redpanda_events.datasource

SCHEMA >

`user_id` Int32 `json:$.user_id`,

`timestamp` DateTime64 `json:$.ts`,

`event_type` String `json:$.event_type`

KAFKA_CONNECTION_NAME redpanda

KAFKA_TOPIC key_value

KAFKA_GROUP_ID topic_0_1747832144

KAFKA_STORE_RAW_VALUE 'False'

Deploy locally and check it's working:

# deploy locally

tb deploy --check

tb deploy

# produce a message to the Redpanda topic

# one "key value" record per line, so every record matches the '%k %v\n' format in any shell
printf '%s\n' 'key {"user_id":2324,"event_type":"BUTTON_CLICK","ts":"2024-11-25T20:23:59.380Z"}' 'key {"user_id":3333,"event_type":"SCROLL","ts":"2024-11-25T20:24:14.774Z"}' 'key {"user_id":7272,"event_type":"BUTTON_CLICK","ts":"2024-11-25T20:24:34.552Z"}' | rpk topic produce key_value --format='%k %v\n'

# check the data gets to the Tinybird kafka data source

tb sql "select * from redpanda_events"

# Running against Tinybird Local

──────────────────────────────────

user_id: 2324

timestamp: 2024-11-25 20:23:59.380

event_type: BUTTON_CLICK

__value:

__topic: key_value

__partition: 0

__offset: 1

__timestamp: 2025-05-22 09:37:19

__key: key

──────────────────────────────────

Then, check that you can access the Iceberg table in S3:

tb sql "SELECT * FROM iceberg('s3://redpanda-iceberg/redpanda/key_value', '<your_aws_key>','<your_aws_secret>')"

If something isn't working, query tinybird.kafka_ops_log or look for quarantined rows in redpanda_events_quarantine (these are usually caused by schema mismatches).
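Since quarantine errors usually come down to schema mismatches, a quick local check of a message before producing it can save a round trip. The sketch below is only an approximation I'd use for debugging, not Tinybird's actual validation logic (which may be more lenient, for example with type coercion). It assumes the three columns from the schema above, and `schema_problems` is a hypothetical helper:

```python
from datetime import datetime

INT32_MIN, INT32_MAX = -2**31, 2**31 - 1


def schema_problems(event: dict) -> list[str]:
    """Return reasons why `event` would not fit the redpanda_events schema."""
    problems = []

    user_id = event.get("user_id")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(user_id, int) or isinstance(user_id, bool):
        problems.append("user_id is missing or not an integer")
    elif not INT32_MIN <= user_id <= INT32_MAX:
        problems.append("user_id does not fit in Int32")

    try:
        # The ISO 8601 form used in this post, e.g. 2024-11-25T20:23:59.380Z
        datetime.strptime(event.get("ts"), "%Y-%m-%dT%H:%M:%S.%fZ")
    except (TypeError, ValueError):
        problems.append("ts is missing or not an ISO 8601 timestamp")

    if not isinstance(event.get("event_type"), str):
        problems.append("event_type is missing or not a string")

    return problems
```

An empty list means the message has the shape the data source expects. Anything else is a hint about what to look for in the quarantine table.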

Backfilling Historical Data from Iceberg

As explained in the previous post, you can use copy pipes to backfill Iceberg tables into Tinybird.

Create a copy pipe:

# copies/backfill_events.pipe

NODE backfill

SQL >

%

SELECT *

FROM iceberg(

's3://redpanda-iceberg/redpanda/key_value',

{{ tb_secret('AWS_KEY') }},

{{ tb_secret('AWS_SECRET') }}

)

WHERE ts between {{DateTime(from_date)}} and {{DateTime(to_date)}}

TYPE copy

TARGET_DATASOURCE redpanda_events

Backfill with this command:

tb copy run backfill_events --param from_date='2020-01-01 00:00:00' --param to_date='2025-05-14 00:00:00' --wait
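For a long date range, you may prefer to run the backfill in smaller windows instead of a single job. That's an assumption on my part and not a requirement of copy pipes. Here's a Python sketch that splits a range at month boundaries and builds one `tb copy run` command per window, reusing the flags from the command above. The helper names are made up for this example:

```python
import shlex
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S"


def month_windows(start: datetime, end: datetime):
    """Yield consecutive (from, to) pairs that split [start, end] at month boundaries."""
    cursor = start
    while cursor < end:
        # First instant of the month after `cursor`.
        if cursor.month == 12:
            next_month = datetime(cursor.year + 1, 1, 1)
        else:
            next_month = datetime(cursor.year, cursor.month + 1, 1)
        upper = min(next_month, end)
        yield cursor, upper
        cursor = upper


def backfill_commands(start: datetime, end: datetime, pipe: str = "backfill_events"):
    """Build one `tb copy run` command line per monthly window."""
    return [
        "tb copy run {} --param from_date={} --param to_date={} --wait".format(
            pipe, shlex.quote(lo.strftime(FMT)), shlex.quote(hi.strftime(FMT))
        )
        for lo, hi in month_windows(start, end)
    ]


for command in backfill_commands(datetime(2025, 3, 1), datetime(2025, 5, 14)):
    print(command)
```

One caveat: the copy pipe filters with `between`, which is inclusive on both ends, so a row that falls exactly on a window boundary would be copied by two adjacent windows. Changing the filter to `>= from_date AND < to_date` avoids that.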

Syncing Real-Time Data from Redpanda

Now you can leverage materialized views and Tinybird API endpoints like this:

# materializations/event_metrics.pipe

NODE process_events

SQL >

SELECT

toStartOfMinute(timestamp) AS minute,

event_type,

countState() AS event_count

FROM redpanda_events

GROUP BY minute, event_type

TYPE materialized

DATASOURCE event_metrics_mv

...and create an API endpoint using the materialized view or querying the raw streaming events.

# endpoints/event_stats.pipe

NODE event_stats_node

SQL >

%

SELECT

minute,

event_type,

countMerge(event_count) count

FROM event_metrics_mv

WHERE minute >= {{DateTime(start_time)}} AND minute <= {{DateTime(end_time)}}

GROUP BY event_type, minute

ORDER BY count DESC

TYPE endpoint
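If the `countState()` / `countMerge()` pair is unfamiliar: the materialized view stores a partial aggregate per group for each batch of inserted rows, and the endpoint merges those partials at query time. Here's a rough Python model of that idea. It only illustrates the semantics and says nothing about how the states are actually stored, and the function names are made up for this sketch:

```python
from collections import Counter
from datetime import datetime


def partial_counts(rows):
    """Count rows per (minute, event_type), like countState() over one inserted batch."""
    state = Counter()
    for ts, event_type in rows:
        minute = ts.replace(second=0, microsecond=0)  # same idea as toStartOfMinute
        state[(minute, event_type)] += 1
    return state


def merge_counts(states):
    """Combine partial states into final counts, like countMerge() at query time."""
    total = Counter()
    for state in states:
        total.update(state)  # Counter.update adds counts key by key
    return total


# Two batches arriving at different times, each with its own partial state.
batch_1 = partial_counts([
    (datetime(2024, 11, 25, 20, 23, 59), "BUTTON_CLICK"),
    (datetime(2024, 11, 25, 20, 24, 14), "SCROLL"),
])
batch_2 = partial_counts([
    (datetime(2024, 11, 25, 20, 24, 34), "BUTTON_CLICK"),
    (datetime(2024, 11, 25, 20, 24, 50), "SCROLL"),
])
print(merge_counts([batch_1, batch_2]))
```

This is why the endpoint needs `countMerge(event_count)` with a `GROUP BY`: the same (minute, event_type) pair can appear in several partial states until they are merged.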

Deploying Real-Time Analytics APIs to Production

To deploy the Tinybird project to production, create the required secrets, deploy to the cloud, and run the backfill in production:

# create as many secrets as needed

tb --cloud secret set <your_secret_name> <your_secret>

tb --cloud deploy

tb --cloud copy run backfill_events --param from_date='2020-01-01 00:00:00' --param to_date='2025-05-14 00:00:00' --wait

Here's more info on how to enable Iceberg support in Redpanda BYOC.
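Once deployed, the `event_stats` endpoint can be called over HTTP from any application. Here's a minimal Python sketch that builds the request with the standard library. The API host and the token are placeholders I'm assuming for illustration (use your workspace's region host and a read token), and `build_request` is a hypothetical helper:

```python
import urllib.request
from urllib.parse import urlencode

# Assumption: default Tinybird API host; substitute the host for your region.
API_HOST = "https://api.tinybird.co"


def build_request(pipe: str, params: dict, token: str, host: str = API_HOST):
    """Build (but do not send) a GET request for a published pipe endpoint."""
    url = f"{host}/v0/pipes/{pipe}.json?{urlencode(params)}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


request = build_request(
    "event_stats",
    {"start_time": "2024-11-25 20:00:00", "end_time": "2024-11-25 21:00:00"},
    token="<your_read_token>",  # placeholder, not a real token
)
print(request.full_url)
```

Passing `request` to `urllib.request.urlopen` and decoding the body with `json.load` should return the result rows under the `data` key of the response.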

Conclusion

With this approach, you can build real-time analytical applications that leverage both historical data in Iceberg and streaming data from Kafka, with minimal infrastructure overhead and maximum developer productivity.

Check out this GitHub project for a complete Kafka + Iceberg + Tinybird integration.