One of the critical challenges in event-driven architectures is efficient message routing. Kafka headers help solve this problem: they are key-value pairs attached to individual Kafka messages that carry metadata and additional information about each message.

Imagine a scenario where you need to route messages to specific services, applications, or tenants based on custom criteria. By storing routing information in headers, such as target service identifiers, you can implement sophisticated routing and filtering on the consumer side without mixing that metadata into the message payload.

Tinybird supports Kafka headers as a part of its native Kafka Connector. Tinybird users can parse and analyze the contents of Kafka headers to build message routing workflows and real-time analytics with the metadata they contain.

Read on to learn more about what Kafka headers are, the use cases that Kafka headers enable, and how to build analytics over Kafka headers (and other Kafka stream data) using Tinybird.

What are Kafka message headers?

Message headers are a key feature of Apache Kafka that allows you to attach metadata to individual records (or messages) in the form of key-value pairs.

Kafka headers are analogous to HTTP headers, which carry metadata about a request or response, such as its content type, compression details, or rate limit status. In the same way, Kafka header metadata is kept separate from the actual message key and value, which typically hold the main content of the message.

What is the format of Kafka message headers?

Kafka headers are key-value pairs, where the header key is a java.lang.String type and the header value is a byte array.

How do you create Kafka headers?

Using Java, you add Kafka headers to an instance of the ProducerRecord class by calling headers().add(key, value). The key must be a String, and the value must be serialized into a byte array. For example:
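Here is a minimal sketch using the kafka-clients library. The topic name contacts and the header names event_type and schema_version are hypothetical placeholders, and sending the record through a configured KafkaProducer is left out so the example runs without a broker.

```java
import java.nio.charset.StandardCharsets;

import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.header.Header;

public class HeaderProducerExample {

    // Builds a record for a hypothetical "contacts" topic and attaches two custom headers.
    static ProducerRecord<String, String> buildRecord(String contactId, String payloadJson) {
        ProducerRecord<String, String> record =
                new ProducerRecord<>("contacts", contactId, payloadJson);

        // Header keys are Strings; values must be serialized to byte arrays by the caller.
        record.headers().add("event_type", "created".getBytes(StandardCharsets.UTF_8));
        record.headers().add("schema_version", "2".getBytes(StandardCharsets.UTF_8));
        return record;
    }

    public static void main(String[] args) {
        ProducerRecord<String, String> record = buildRecord("contact-42", "{\"name\":\"Ada\"}");

        // In a real producer you would now call producer.send(record) on a configured KafkaProducer.
        for (Header header : record.headers()) {
            String value = new String(header.value(), StandardCharsets.UTF_8);
            System.out.println(header.key() + " = " + value);
        }
    }
}
```

Headers must be added before the record is handed to send(); after that the producer treats them as read-only.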

How do you read Kafka headers?

To read Kafka headers from a ConsumerRecord instance in Java, you can iterate over headers(), for example:
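The sketch below, again assuming the kafka-clients library, walks a record's headers and decodes each value as UTF-8 text. Treating values as UTF-8 strings is an assumption; binary header values would need their own decoding. The record is built by hand as a stand-in for one returned by consumer.poll(...), so no broker is needed.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.common.header.Header;

public class HeaderConsumerExample {

    // Collects a record's headers into an ordered map, decoding each value as UTF-8.
    // Kafka allows repeated keys; here a later header with the same key overwrites an earlier one.
    static Map<String, String> headersAsStrings(ConsumerRecord<?, ?> record) {
        Map<String, String> result = new LinkedHashMap<>();
        for (Header header : record.headers()) {
            byte[] raw = header.value(); // header values may be null
            result.put(header.key(), raw == null ? null : new String(raw, StandardCharsets.UTF_8));
        }
        return result;
    }

    public static void main(String[] args) {
        // Stand-in for a record returned by consumer.poll(...).
        ConsumerRecord<String, String> record =
                new ConsumerRecord<>("contacts", 0, 0L, "contact-42", "{\"name\":\"Ada\"}");
        record.headers().add("event_type", "deleted".getBytes(StandardCharsets.UTF_8));

        headersAsStrings(record).forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

If you only need one header, record.headers().lastHeader("event_type") returns the most recent header with that key, or null if there is none.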

Note that Tinybird’s Kafka connector handles this automatically, so no Java is required. Tinybird deserializes Kafka headers and stores them in a __headers column in the resulting Data Source. More on that below.

What are Kafka headers used for?

Custom headers in Apache Kafka add context to your streaming data and help with routing, versioning, filtering, and identification. Here are some common use cases for message headers in Apache Kafka:

- Message routing: Headers can be used to store routing information, such as the target service, application, or tenant. This enables more sophisticated routing and filtering mechanisms on the consumer side, based on the header information.

- Message versioning: Storing the version of a message schema in a header can help manage schema evolution and backward compatibility. Consumers can use the version information to handle different message versions accordingly.

- Correlation and tracing: Headers can store correlation IDs and trace information for distributed tracing systems, helping track the flow of messages across multiple services and applications. This metadata can help diagnose and troubleshoot issues in distributed systems.

- User or client identifier: Storing a user or client identifier as a custom header can help track which user or system generated a particular message. This information can be useful for analytics, auditing, and troubleshooting purposes.

- Content type: Indicating the type or format of the message value (e.g., JSON, Avro, XML) allows consumers to understand how to deserialize and process the message correctly.

- Priority or importance level: Attaching a priority level to messages as a custom header can be beneficial for systems that need to process messages based on their urgency or importance. Consumers can use this information to prioritize or filter messages during consumption.

- Compression: If a message payload is compressed, the compression algorithm (e.g., Gzip, Snappy, LZ4) can be specified in a header to inform consumers about the decompression method required.
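To make the routing use case concrete, here is a minimal consumer-side sketch assuming the kafka-clients library. The header name target_service and the handler names are hypothetical; the router looks up the handler registered for the header's value and falls back to a default handler when the header is missing or unknown.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.function.Consumer;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.common.header.Header;

public class HeaderRouter {

    // Hypothetical header name carrying the routing target.
    static final String ROUTE_HEADER = "target_service";

    private final Map<String, Consumer<ConsumerRecord<String, String>>> handlers;
    private final Consumer<ConsumerRecord<String, String>> fallback;

    HeaderRouter(Map<String, Consumer<ConsumerRecord<String, String>>> handlers,
                 Consumer<ConsumerRecord<String, String>> fallback) {
        this.handlers = handlers;
        this.fallback = fallback;
    }

    // Returns the target named in the routing header, or null if the header is absent or has a null value.
    static String targetOf(ConsumerRecord<?, ?> record) {
        Header header = record.headers().lastHeader(ROUTE_HEADER);
        if (header == null || header.value() == null) {
            return null;
        }
        return new String(header.value(), StandardCharsets.UTF_8);
    }

    // Sends the record to the handler registered for its target, or to the fallback handler.
    void dispatch(ConsumerRecord<String, String> record) {
        String target = targetOf(record);
        Consumer<ConsumerRecord<String, String>> handler = target == null ? null : handlers.get(target);
        (handler != null ? handler : fallback).accept(record);
    }
}
```

In a real consumer you would call dispatch for each record returned by poll(). Because the decision uses only the header, the same pattern works for filtering by tenant, priority, or schema version.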

In addition to the use cases above, you may need to add encrypted metadata or support feature flags and experiment IDs to aid your development process. If your system handles multilingual or region-specific data, a custom header could specify a locale or language to help on the consumer side of your stream.

As you can see, there are many great uses for Kafka message headers, and utilizing them can improve the flexibility, traceability, and interoperability of your event-driven systems.

Limitations of Kafka headers

Note that while Kafka headers are completely customizable, you should be mindful of the trade-offs of adding them. Every header increases the size of each Kafka message, which adds storage and network overhead in your Kafka cluster.

It's crucial to strike a balance between enriching messages with useful metadata and maintaining optimal performance and resource usage. Deciding which headers you use will depend on the unique requirements of your application.
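To get a feel for that overhead, the sketch below estimates how many bytes a set of headers adds to a single record. It assumes Kafka's v2 record format (used since Kafka 0.11), where each record stores a header count followed by, for each header, a varint key length, the UTF-8 key, a varint value length, and the value bytes. It is an approximation: it ignores the knock-on effect on the record's own length field and any savings from batch compression, and it assumes non-null header values.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

public class HeaderSizeEstimate {

    // Bytes needed for a zigzag-encoded varint, the encoding the v2 record format
    // uses for the header count and for each header's key and value lengths.
    static int sizeOfVarint(int value) {
        int v = (value << 1) ^ (value >> 31); // zigzag: small signed ints become small unsigned ones
        int bytes = 1;
        while ((v & 0xffffff80) != 0) { // more than 7 significant bits remain
            bytes++;
            v >>>= 7;
        }
        return bytes;
    }

    // Approximate size of one record's header section, before any batch compression.
    static int headerSectionBytes(Map<String, byte[]> headers) {
        int total = sizeOfVarint(headers.size()); // header count, present even when it is zero
        for (Map.Entry<String, byte[]> entry : headers.entrySet()) {
            int keyLength = entry.getKey().getBytes(StandardCharsets.UTF_8).length;
            int valueLength = entry.getValue().length;
            total += sizeOfVarint(keyLength) + keyLength + sizeOfVarint(valueLength) + valueLength;
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, byte[]> headers = new LinkedHashMap<>();
        headers.put("event_type", "created".getBytes(StandardCharsets.UTF_8));
        headers.put("correlation_id",
                "3f2b8c1e-9d4a-4e7b-8a61-0c5d2f9e7a10".getBytes(StandardCharsets.UTF_8));
        System.out.println(headerSectionBytes(headers) + " bytes of headers per record"); // prints "72 bytes of headers per record"
    }
}
```

Seventy-odd bytes looks small, but it is paid on every record, so it is worth comparing against your typical payload size before adding many headers.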

An example of using Kafka headers effectively

Let’s say you have a contact entity in your Kafka streams, and that entity can be described with created, updated, and deleted events (common for change data capture use cases). It's common practice to publish all the event types for the same entity to the same Kafka topic, keyed by the entity ID. Kafka only guarantees ordering within a partition, so keeping a contact's events on one topic with one key (and therefore one partition) ensures they are delivered in order and the contact ends up in the correct state.

In this case, you could use a custom Kafka header to indicate the type of event and help you analyze the data downstream. If the header includes the event type (e.g. created, updated, or deleted), you can count the number of active contacts by subtracting the total number of deleted events from the total number of created events, without parsing each message payload to work out what kind of event it is.
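As a sketch of that calculation on the consumer side, assuming the kafka-clients library and a hypothetical event_type header holding created, updated, or deleted, the count can be computed from headers alone. It also assumes each contact produces exactly one created event and at most one deleted event.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.common.header.Header;

public class ActiveContactCount {

    // Reads the hypothetical "event_type" header as a UTF-8 string, or null if it is absent.
    static String eventType(ConsumerRecord<?, ?> record) {
        Header header = record.headers().lastHeader("event_type");
        return (header == null || header.value() == null)
                ? null
                : new String(header.value(), StandardCharsets.UTF_8);
    }

    // Active contacts = created events - deleted events; "updated" events leave the count unchanged.
    // Only the headers are read; the message values are never inspected.
    static long activeContacts(List<? extends ConsumerRecord<?, ?>> records) {
        long created = 0;
        long deleted = 0;
        for (ConsumerRecord<?, ?> record : records) {
            String type = eventType(record);
            if ("created".equals(type)) {
                created++;
            } else if ("deleted".equals(type)) {
                deleted++;
            }
        }
        return created - deleted;
    }

    // Builds an in-memory record with an event_type header (offsets are placeholders).
    static ConsumerRecord<String, String> withEventType(String contactId, String type) {
        ConsumerRecord<String, String> record =
                new ConsumerRecord<>("contacts", 0, 0L, contactId, "{}");
        record.headers().add("event_type", type.getBytes(StandardCharsets.UTF_8));
        return record;
    }

    public static void main(String[] args) {
        List<ConsumerRecord<String, String>> records = List.of(
                withEventType("contact-1", "created"),
                withEventType("contact-2", "created"),
                withEventType("contact-1", "updated"),
                withEventType("contact-2", "deleted"));
        System.out.println("Active contacts: " + activeContacts(records)); // prints "Active contacts: 1"
    }
}
```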

Using Kafka headers for real-time analytics

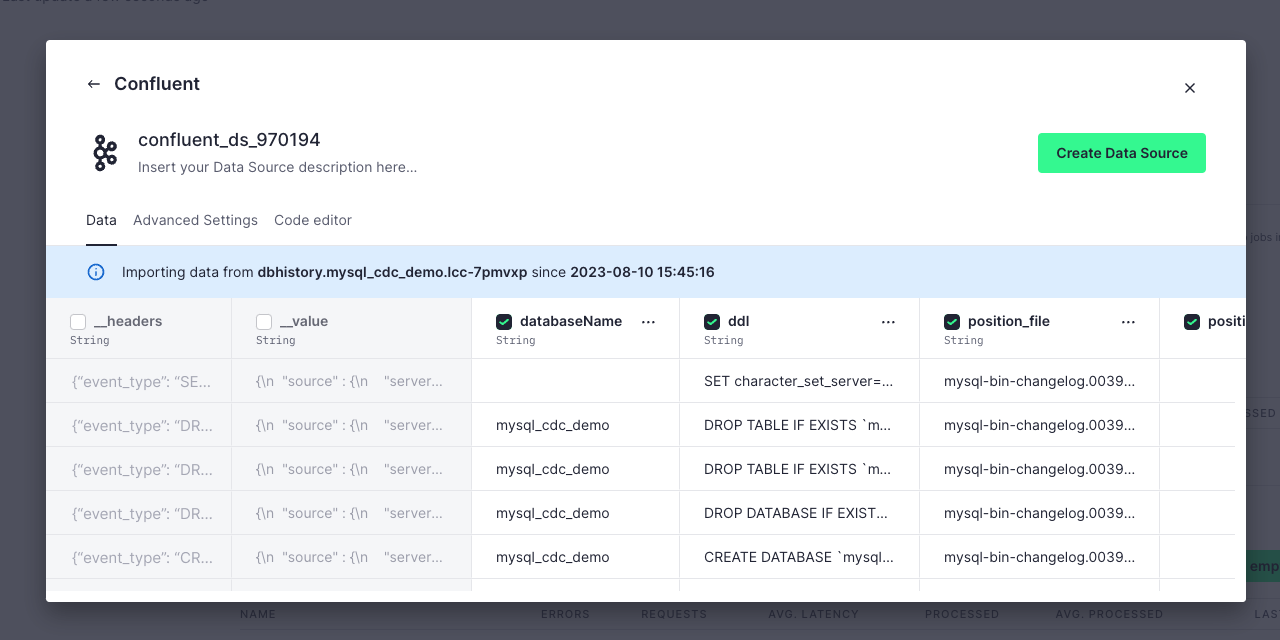

Kafka headers are a powerful way to enhance streams with useful metadata. Since Kafka headers have so many use cases, Tinybird supports them in all of our Kafka-based Connectors: Apache Kafka, Confluent, and, soon, Redpanda.

When you connect a Kafka topic to Tinybird, Tinybird can write the optional metadata columns __topic, __partition, __offset, __timestamp, and __key from each Kafka record to a Data Source. Tinybird also includes an optional __value column that contains the entire unparsed contents of the Kafka message. These metadata columns already provide valuable information for your analysis downstream.

To further enhance Kafka Data Sources, Tinybird also includes a __headers column parsed from the incoming topic messages. When you set up a Kafka Data Source in Tinybird, you’ll see this optional column when you configure your Data Source schema.

If you choose to enable the __headers column in your Data Source config, your Kafka headers will be written to that column as a simple key-value JSON structure. Here is an example header that might be written to the __headers column in an example banking_transactions Data Source:
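For intuition about that shape, here is a minimal sketch, assuming the kafka-clients library and UTF-8 string header values, that renders a record's headers as a flat JSON object of string keys and values. The header names transaction_type and channel are hypothetical, and Tinybird's own serializer may differ in details such as how it handles binary values or repeated keys. RecordHeaders lives in an internals package of kafka-clients and is used here only to build headers by hand.

```java
import java.nio.charset.StandardCharsets;

import org.apache.kafka.common.header.Header;
import org.apache.kafka.common.header.Headers;
import org.apache.kafka.common.header.internals.RecordHeaders;

public class HeadersToJson {

    // Renders headers as a flat JSON object; null values become JSON null.
    // Repeated keys are emitted as-is, in header order.
    static String toJson(Headers headers) {
        StringBuilder json = new StringBuilder("{");
        boolean first = true;
        for (Header header : headers) {
            if (!first) {
                json.append(',');
            }
            first = false;
            json.append(quote(header.key())).append(':');
            byte[] raw = header.value();
            json.append(raw == null ? "null" : quote(new String(raw, StandardCharsets.UTF_8)));
        }
        return json.append('}').toString();
    }

    // Minimal JSON string escaping: quotes, backslashes, and control characters.
    static String quote(String s) {
        StringBuilder out = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                out.append('\\').append(c);
            } else if (c < 0x20) {
                out.append(String.format("\\u%04x", (int) c));
            } else {
                out.append(c);
            }
        }
        return out.append('"').toString();
    }

    public static void main(String[] args) {
        // Hypothetical headers for a banking_transactions topic.
        Headers headers = new RecordHeaders();
        headers.add("transaction_type", "withdrawal".getBytes(StandardCharsets.UTF_8));
        headers.add("channel", "mobile".getBytes(StandardCharsets.UTF_8));
        System.out.println(toJson(headers)); // prints {"transaction_type":"withdrawal","channel":"mobile"}
    }
}
```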

You can then use the JSONExtract() function to access individual key-value header entries in your SQL nodes:

From there, you can publish any queries you’ve built using Kafka headers as API endpoints. This is especially useful when you want to map query parameters in your APIs to Kafka header metadata. For example, using the example above, you could augment your SQL to dynamically return the sum total of banking transactions filtered by header metadata supplied through a query parameter (assuming your Kafka message included an amount key):

If you’re interested in building analytics APIs over Kafka streams, check out the tutorial below:

Conclusion

Kafka headers are a powerful way to enhance Kafka streams with useful metadata, providing greater flexibility, traceability, and interoperability in event-driven architectures.

Custom headers are used to tailor messages to specific application requirements, such as user or client identification, message priority, message expiration, encryption or signing information, locale or language, and feature flags or experiment IDs.

With Tinybird's support for Kafka headers, you can access them in a __headers column in your Tinybird Data Sources and extract individual key-value pairs in your SQL queries to enhance your published analytics APIs.

If you’re new to Tinybird, give it a try. You can start for free on our Build Plan, which is more than enough for small projects and has no time limit, and use the Kafka Connector to quickly ingest data from your Kafka topics.

For more information about Tinybird and its integration with Kafka, use these resources: