Cloud infrastructure usage is growing for everyone, regardless of industry or company type. However, the cost of cloud services also continues to rise. We’re all looking for ways to cut back on spending this year without sacrificing performance or reliability. So, while we’re all being asked to cut costs in 2023, none of us have been offered a commensurate reduction in expectations. We still need to deliver more features, more value, and more revenue, even in the face of hiring freezes, fewer resources, and tightened budgets.

In short, we need to do more with less.

In this blog post, I offer seven practical strategies that we are using at Tinybird that you can also use to reduce your company’s cloud infrastructure spending this year.

These are seven practical strategies we're using at Tinybird to reduce our cloud infrastructure costs in 2023.

The 7 cost-reducing strategies are:

- Take inventory of what you have. You can’t reduce costs unless you know exactly what your costs are and where they originate.

- Identify your commodity tech and see if there are better, more cost-effective alternatives.

- Forecast future usage so that you can optimize pricing through committed or reserved instances.

- Stop misusing cloud services for purposes other than their original intent. Instead, move workloads onto purpose-built services to avoid excess usage.

- Optimize SQL queries and other code to pare down the cost of compute on your data warehouse.

- Consider managed infrastructure that bundles numerous services and can replace the need to operate and integrate many disparate services.

- Choose better, more modern tools and replace decade-old products that haven’t innovated on features, developer experience, or cost savings.

Tinybird is a serverless real-time data platform. We enable developers to ingest massive amounts of data at scale, query and shape that data using SQL, and publish those queries as high-concurrency, low-latency APIs to be consumed by their applications. Reliability, scalability, and a pain-free developer experience are the pillars upon which our services are based. In bringing Tinybird to market, we employ each of these strategies to reduce costs and maintain an exceptional standard of service that enables businesses of all sizes to trust us with their data.

1. Inventory what you have

The first step in any cost-saving effort is to understand what you're currently paying for. This means taking an inventory of all your cloud services and analyzing their usage. You may discover that you're paying for services you don't need, or that you're overpaying for services that you're not fully utilizing.

Take an inventory of what you're paying for. Check for over-provisioned or unused instances, and map out all your cloud infrastructure dependencies.

Let's say that you're running an eCommerce website that relies heavily on cloud infrastructure to handle your traffic and transactions. To take inventory of your cloud components, you might start by looking at your AWS account to see what services you're currently using.

You might find that you're using services like Amazon EC2 instances to host your website, Amazon RDS to manage your databases, and Amazon S3 to store your media files. However, upon further analysis, you might discover that you're not fully utilizing these services. For example, you might have multiple EC2 instances running even though your website only receives a small amount of traffic, or you might have a large RDS instance even though your database is relatively small.

To further refine your analysis, you might want to use monitoring tools like CloudWatch to track the usage and performance of your services over time. This can help you identify which services are being underutilized and which are driving up your costs.
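As a rough sketch of that analysis, the snippet below flags instances whose average CPU utilization falls under a threshold and lists the most expensive ones first. The instance names, costs, CPU samples, and the 20% threshold are all hypothetical; in practice the samples would come from whatever metrics your monitoring tool exports.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Instance:
    name: str                 # hypothetical resource label
    monthly_cost: float       # USD per month (made-up figure)
    cpu_samples: list[float]  # CPU utilization percentages over the review period


def flag_underutilized(instances, cpu_threshold=20.0):
    """Return (name, avg_cpu, monthly_cost) for instances whose average CPU
    is below cpu_threshold, most expensive first."""
    flagged = [
        (i.name, round(mean(i.cpu_samples), 1), i.monthly_cost)
        for i in instances
        if i.cpu_samples and mean(i.cpu_samples) < cpu_threshold
    ]
    return sorted(flagged, key=lambda row: row[2], reverse=True)


# Illustrative inventory, not real data.
inventory = [
    Instance("web-1", 140.0, [55, 62, 48, 70]),
    Instance("web-2", 140.0, [4, 6, 3, 5]),
    Instance("db-large", 520.0, [9, 12, 8, 11]),
]

for name, avg_cpu, cost in flag_underutilized(inventory):
    print(f"{name}: avg CPU {avg_cpu}%, ${cost:.2f}/month")
```

CPU is only one signal. Memory, I/O, and connection counts may matter more for a database, so treat a flagged resource as a candidate for review rather than an automatic downsize.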

Another important aspect of taking inventory is understanding the dependencies between your cloud components. For example, you might find that your website relies on a number of other services like Lambda functions, API Gateway, or CloudFront. By mapping out these dependencies, you can identify which services are critical to your website's performance and which can be optimized or eliminated to reduce costs.
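One way to picture the dependency mapping is as a small graph walk: start from the entry point you care about, follow every dependency, and see which billed resources are never reached. This is a minimal sketch with made-up resource names. A real map would be assembled from your own architecture docs or infrastructure-as-code definitions.

```python
# Hypothetical dependency map: each key depends on the services in its list.
dependencies = {
    "website": ["cloudfront", "api-gateway"],
    "cloudfront": ["s3-media"],
    "api-gateway": ["lambda-checkout"],
    "lambda-checkout": ["rds-orders"],
    "lambda-legacy-report": ["rds-orders"],
}

# Everything that shows up on the bill (also hypothetical).
billed_resources = [
    "website", "cloudfront", "s3-media", "api-gateway",
    "lambda-checkout", "rds-orders", "lambda-legacy-report", "ec2-staging-old",
]


def reachable(graph, root):
    """Return every node reachable from root by following dependencies."""
    seen = set()
    stack = [root]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return seen


def unreferenced(graph, resources, root):
    """Billed resources that the root service never depends on."""
    return sorted(set(resources) - reachable(graph, root))


print(unreferenced(dependencies, billed_resources, "website"))
```

Resources that come back from `unreferenced` are not necessarily waste (they may serve another product), but they are a good place to start asking questions.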

Taking inventory of your cloud components and services is a critical first step in identifying opportunities for infrastructure cost savings. By analyzing your usage, monitoring performance, and understanding dependencies, you can identify which services are driving up your costs and which can be optimized to achieve more with less. This not only helps you reduce your cloud spending, but it can also improve your overall cloud performance and reliability.

At Tinybird, we follow this mantra. Tinybird is a real-time data platform, and we export our detailed metrics from Google Cloud Platform, Amazon Web Services, and Microsoft Azure directly into our data warehouses. We then use native Connectors (like our BigQuery Connector) to sync that data into Tinybird, where we build real-time dashboards (like the one below) and alerts that enable us to inventory our costs as they are incurred and keep us from being “foolish” (in the words of our CTO).

Generally speaking, we always start with smaller instances and grow both compute and storage as we scale. But having a real-time dashboard enables us to be very responsive to our customers’ needs and our own business requirements. We can also use the APIs that we publish in Tinybird to adjust our cloud infrastructure usage programmatically.

2. Identify commodity tech

When you look at your full inventory of cloud services, identify which components are commodity tech. Commodity tech refers to services that are widely available across multiple cloud providers and are not unique to any one vendor. These components may include things like storage, compute, or networking services. By identifying commodity tech, you may uncover opportunities to switch vendors simply based on price.

Commodity tech like object storage, databases, data warehouses, and CDNs can potentially be moved to lower cost alternatives.

For example, here are some ways you could potentially save money by moving off of commodity components in Amazon Web Services to other, potentially lower-cost alternatives.

3. Forecast future usage to optimize & negotiate

Predicting future cloud consumption is crucial for managing and reducing cloud spend. By leveraging historical data and identifying usage drivers, you can make informed decisions to take advantage of cost-reducing measures such as reserved instances and upfront commitments.

Regularly monitoring and adjusting your predictions will help you maintain an optimized and cost-effective cloud infrastructure.

- Analyze historical usage data: Gather and analyze historical data on your cloud usage to identify trends and patterns. This data provides valuable insights into the seasonality, growth rate, and potential fluctuations in your organization's cloud consumption.

- Identify usage drivers: Determine the primary drivers of your cloud consumption, such as product launches, marketing campaigns, or infrastructure upgrades. This will help you forecast demand for cloud services based on your business activities.

- Monitor and adjust: Continuously monitor your cloud consumption and compare it against your predictions. Adjust your forecasts as necessary to account for changes in business needs, industry trends, or technological advancements.

Measure your current usage and assess your future needs to predict your annual usage. Use those predictions to get commitment discounts or reserved instances from your cloud providers. Update and adjust as needed.

How you analyze this data is up to you; often, you can capture usage metrics from the cloud platform and send this data to your existing Cloud Data Warehouse. From there, you can analyze it for trends, and enrich it with analysis of other internal datasets.
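To make the forecasting step concrete, here is a minimal sketch that fits a straight-line trend to past monthly spend and projects it forward. The spend figures are invented, and a plain linear fit ignores seasonality and one-off events, so a real forecast would likely need a richer model or manual adjustments for known usage drivers.

```python
def fit_linear_trend(values):
    """Ordinary least-squares fit of values against month index 0..n-1.
    Returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def forecast(values, months_ahead):
    """Project the fitted trend for the next months_ahead months."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(months_ahead)]


# Hypothetical monthly cloud spend in USD for the last six months.
monthly_spend = [1000.0, 1100.0, 1200.0, 1300.0, 1400.0, 1500.0]

next_year = forecast(monthly_spend, 12)
print(f"Projected spend over the next 12 months: ${sum(next_year):,.2f}")
```

The projected annual total is the kind of number you would bring to a commitment or reserved-instance conversation, ideally alongside a low and a high scenario.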

At Tinybird, we capture all of our cloud usage data and load it into (you guessed it) Tinybird. The Tinybird Events API makes it trivial to connect the various cloud services and send usage logs to Tinybird, analyze the data in SQL, and then integrate the resulting metrics into charting tools like Grafana using, for example, the Tinybird Grafana Plugin.
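As an illustration of what sending usage logs to the Events API could look like, the sketch below builds (but does not send) an HTTP request. It assumes the Events API accepts newline-delimited JSON via `POST` to `/v0/events` with the target Data Source in a `name` query parameter and a bearer token in the `Authorization` header; check the current Tinybird docs for the right host for your region. The Data Source name `cloud_usage` and the record fields are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumed base URL; the host may differ depending on your Tinybird region.
TINYBIRD_EVENTS_URL = "https://api.tinybird.co/v0/events"


def build_events_request(datasource, token, records):
    """Build a POST request carrying records as newline-delimited JSON."""
    body = "\n".join(json.dumps(record) for record in records).encode("utf-8")
    url = f"{TINYBIRD_EVENTS_URL}?{urlencode({'name': datasource})}"
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


# Hypothetical usage records exported from a cloud provider.
usage_records = [
    {"timestamp": "2023-03-01T00:00:00Z", "service": "ec2", "cost_usd": 41.2},
    {"timestamp": "2023-03-01T00:00:00Z", "service": "s3", "cost_usd": 7.9},
]

request = build_events_request("cloud_usage", "<YOUR_TOKEN>", usage_records)
print(request.get_method(), request.full_url)

# To actually send the data, you would call:
# urllib.request.urlopen(request)
```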

With a clear understanding of your needs, you can unlock several levers that you can employ as part of a wider cost reduction strategy:

- Reserved Instances: By predicting your future cloud usage, you can strategically purchase reserved instances for long-term commitments. These instances typically offer significant discounts compared to on-demand pricing. For example, AWS, Azure, and Google Cloud all provide reserved instance options with varying terms and payment options. A reserved instance of AWS EC2 can get you discounts of up to 72%.

- Upfront Commitments: Leverage your usage predictions to negotiate upfront commitments with cloud providers. These agreements guarantee a minimum level of usage over a specific time period in exchange for reduced rates. Make sure to consider the flexibility of the agreement, as business needs may change, requiring adjustments to your cloud consumption. It is common to combine an upfront commitment with optional on-demand usage if your usage goes above the agreed commitment.

- Rightsizing and optimization: Accurate cloud usage predictions enable you to rightsize your infrastructure, ensuring you are using the appropriate resources for your workloads. This can lead to cost savings by preventing over-provisioning and underutilization of cloud resources.

- Scaling strategies: Predicting future cloud consumption allows you to plan and implement effective scaling strategies, such as auto-scaling or scheduled scaling, based on your expected demand. This can help optimize costs by ensuring you have the necessary resources available when needed while avoiding unnecessary expenses during periods of low demand.
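The trade-off behind reserved instances and commitments comes down to simple arithmetic: you pay for the committed hours whether you use them or not, so the discount only pays off above a certain utilization. The sketch below works this out under simplified assumptions (a single flat discount, no upfront-payment variants). The $0.10 hourly rate is hypothetical, and the 72% discount is the upper bound quoted above.

```python
def committed_vs_on_demand(on_demand_hourly, discount, committed_hours, used_hours):
    """Return (reserved_total, on_demand_total) for a billing period.

    The commitment is paid in full regardless of usage; any hours beyond
    the commitment are billed at the on-demand rate.
    """
    reserved_cost = on_demand_hourly * (1 - discount) * committed_hours
    overflow_hours = max(0, used_hours - committed_hours)
    reserved_total = reserved_cost + overflow_hours * on_demand_hourly
    on_demand_total = on_demand_hourly * used_hours
    return reserved_total, on_demand_total


def break_even_utilization(discount):
    """Fraction of committed hours you must actually use for the
    commitment to cost the same as paying on demand."""
    return 1 - discount


HOURS_PER_YEAR = 8760
rate = 0.10      # hypothetical on-demand price in USD per hour
discount = 0.72  # maximum discount cited above; yours may be lower

for used in (2000, 4380, 8760):
    reserved, on_demand = committed_vs_on_demand(rate, discount, HOURS_PER_YEAR, used)
    print(f"{used} hours used: reserved ${reserved:.2f} vs on-demand ${on_demand:.2f}")

print(f"Break-even utilization: {break_even_utilization(discount):.0%}")
```

With a smaller discount the break-even utilization rises quickly, which is why the quality of the forecast matters as much as the headline discount.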

It’s worth noting that, over the past decade, the industry has trended towards “horizontal” scaling, which offered immense flexibility but incurred higher costs. Recently, there has been a movement toward a more balanced approach: Vertical scaling can be used as a cost-effective way to scale up to meet consistent growth, while horizontal scaling can be used to react to sudden, unpredictable, and temporary increases in demand.

Tinybird is a cloud-based platform, and behind the scenes, we rely on many services from cloud providers like AWS and GCP. We use these same strategies to optimize our own costs; we forecast our future usage and use this to negotiate upfront commitments with cloud providers. We can then pass these savings on to our customers by offering a packaged service that is more cost-efficient than the aggregate unit cost of the cloud services.

4. Identify misuse and move workloads to the right tech

Businesses commonly misuse cloud services or use them for purposes they weren't intended for. By identifying misuse and moving workloads to the right tech, you can reduce your cloud spending without sacrificing performance.

Smaller companies (and even larger ones) often misuse tools they already have because they'd prefer not to spin up new tools. Using tools in the wrong way is expensive. Choose fit-for-purpose alternatives that are easy to start with and scale.

Here are four examples of commonly misused technologies that can end up costing you a lot of money:

- Elasticsearch: Elasticsearch is a powerful search engine that enables businesses to quickly and easily search large volumes of data. However, it is often misused as an analytical database, leading to inefficient consumption of cloud resources and increased costs. Instead, consider a purpose-built OLAP database like ClickHouse® or Apache Pinot for structured data querying.

- AWS Lambda: AWS Lambda is a serverless compute service that enables you to run code in response to events or triggers. However, AWS Lambda is often misused for long-running or CPU-intensive tasks, leading to inefficient consumption of cloud resources and increased costs. Instead of using AWS Lambda for these tasks, you should consider using a more appropriate compute service like EC2 or ECS.

- AWS RDS: AWS RDS is a fully-managed database service that enables you to easily set up, operate, and scale databases in the cloud. However, AWS RDS is often misused for data warehousing, leading to inefficient consumption of cloud resources and increased costs. Instead of using AWS RDS for data warehousing, you should consider using a purpose-built data warehousing service like BigQuery or Snowflake.

- Data Warehouses: Data Warehouses, such as Snowflake or BigQuery, are designed to handle large volumes of data and complex queries for analytical purposes. However, they are often misused as real-time databases for user-facing applications, leading to inefficient consumption of cloud resources and increased costs. Instead of using a data warehouse as a real-time database, you should consider using a service like Tinybird to sync data warehouse data to a fast database and build real-time APIs for user-facing applications.

Allow me to use Tinybird as an example of what I mean by using the right tech for the job.

Today, very few acceptable solutions exist to allow user-facing applications to directly access the data in your data warehouse. In many cases, Tinybird’s clients and prospects have tried to build web services on top of data warehouses like Snowflake, which they subsequently attempt to query through HTTP requests.

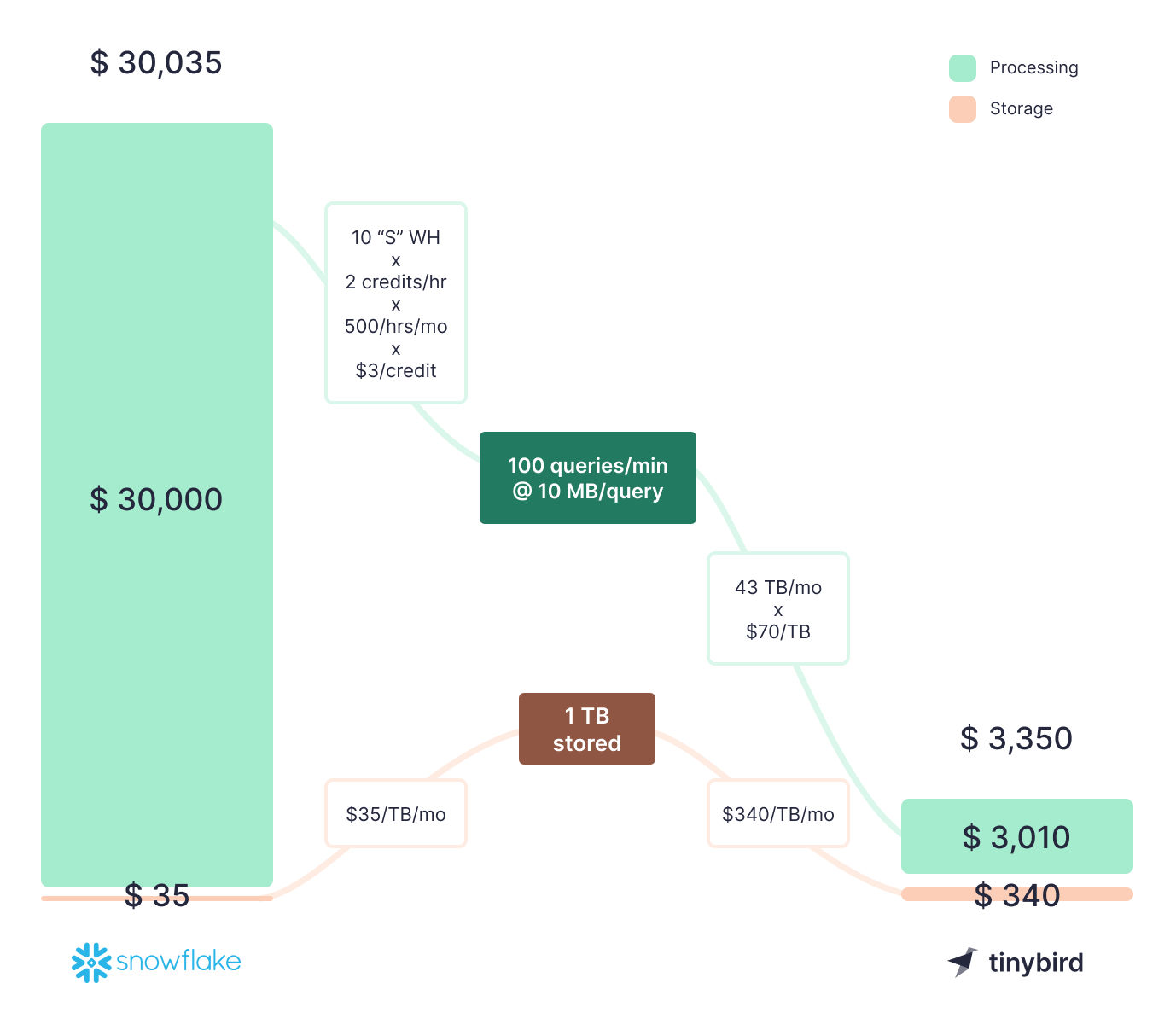

This approach is limited by the concurrency Snowflake allows (limited connections per warehouse depending on cluster size), so many customers end up “throwing money at it,” provisioning quite a lot of data warehouses to make this work. And even then, it's barely usable.

With Tinybird, you can regularly sync data from a data warehouse, query and shape it using SQL, and publish it as high-concurrency, low-latency APIs. Tinybird performs the scheduled sync jobs in the background, allowing you to build fast user-facing applications while reducing data warehouse costs.

Applied to a real-world example, Tinybird can potentially reduce costs for this type of workload by up to 10x.

5. Write better SQL queries for data warehouses

Data warehouses are typically usage-based, meaning the more data you transfer or process, the more you'll pay. However, many business intelligence (BI) tools (and the analysts who wield them) are inefficient at writing queries, which can lead to unnecessary spending. By writing better SQL queries for your data warehouses, you can optimize your data processing and reduce your cloud spending.

The majority of your data warehouse spending comes from queries run against data. Increasing query efficiency by 10% across the board could reduce your overall data warehousing costs by the same amount.

Let's say you have a data warehouse that contains sales data for your business, and you're using a BI tool to generate reports for your sales team. The BI tool generates a report that shows the total sales for each product category, but it's slow and costly to run, especially as your data grows over time.

This SQL query is straightforward, but it requires scanning the entire sales_data table to calculate the total sales for each product category. As the size of your data warehouse grows, this query will become increasingly slow and costly to run.

To make the query more efficient, consider a filter, as in the query below.

In this revised query, I've added a WHERE clause that filters the sales_data table to only include sales that occurred after January 1st, 2022. This reduces the amount of data that needs to be scanned to generate the report, resulting in faster and more efficient processing. Additionally, by reducing the amount of data being processed, this query can also reduce your overall data warehouse costs, as you're only paying for the data that you're actually using.
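The two queries described above might look something like the following. This is a sketch, not the original queries: the column names `product_category`, `sale_amount`, and `sale_date` are assumptions, and the example runs against an in-memory SQLite table purely so it is self-contained. SQLite does not bill by data scanned, so it shows the shape of the queries rather than the savings. In a usage-priced warehouse, the filter tends to help most when the table is partitioned or clustered on the date column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_data (product_category TEXT, sale_amount REAL, sale_date TEXT)"
)
# Tiny illustrative dataset; dates are ISO strings so they compare correctly as text.
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?)",
    [
        ("books", 20.0, "2021-06-15"),
        ("books", 35.0, "2022-03-01"),
        ("games", 60.0, "2021-11-30"),
        ("games", 45.0, "2022-07-19"),
    ],
)

# Original report: aggregates over every row in the table.
FULL_SCAN_QUERY = """
SELECT product_category, SUM(sale_amount) AS total_sales
FROM sales_data
GROUP BY product_category
ORDER BY product_category
"""

# Revised report: filter first, and select only the columns the report needs.
FILTERED_QUERY = """
SELECT product_category, SUM(sale_amount) AS total_sales
FROM sales_data
WHERE sale_date > '2022-01-01'
GROUP BY product_category
ORDER BY product_category
"""

full_report = conn.execute(FULL_SCAN_QUERY).fetchall()
filtered_report = conn.execute(FILTERED_QUERY).fetchall()
print("All time:  ", full_report)
print("Since 2022:", filtered_report)
```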

Follow tried-and-true rules to optimize queries: Filter early and by indexed columns, only select columns you need, JOIN and aggregate last.

Of course, this is just one example, and the specific optimizations you can make will depend on your data and your use case. However, by taking the time to write more efficient SQL queries for your data warehouse, you can achieve significant cost savings while also improving performance and productivity.

At Tinybird, we help our customers put this into practice by visualizing consumed data for every node of SQL they write, offering in-product tooltips for optimization, and making available Service Data Sources that can be analyzed at no cost to monitor query performance.

6. Consider managed infrastructure

Managing your own cloud infrastructure can be time-consuming and costly. While it’s true that some managed services can have a higher unit price, they often end up reducing overall costs, as they require fewer people dedicated to simply maintaining infrastructure. Choosing fully-managed infrastructure allows you to dedicate more resources to delivering work that provides real business value. You’ll often see managed services called “database-as-a-service” (DBaaS) or “infrastructure-as-a-service” (IaaS).

Additionally, many modern managed services, such as Tinybird, go beyond simple DBaaS/IaaS and combine multiple services into a single managed service. For example, in Tinybird we’ve combined the features of an ETL (extract-transform-load) tool, OLAP (Online Analytical Processing) database, and API development framework into a single managed service. This offers a significant cost advantage, as there is no need to separately procure, integrate, staff, and scale three distinct products or frameworks.

Bundled managed infrastructure provides economies of scale. Managed infrastructure providers distribute their services' compute across shared resources. They spend more than any single customer and can more easily project future usage, so they are often the best at securing steep discounts from cloud vendors, which they (hopefully) pass on to their customers. When choosing managed analytics platforms, compare actual costs across real-world scenarios; see our detailed Tinybird vs ClickHouse® Cloud cost comparison for practical examples.

Managed services that bundle many services together into one product can leverage economies of scale on your behalf, meaning that their unit price for the underlying cloud services is cheaper, and they offer attractive prices to compete with the cloud vendors.

Not only can the service cost often be cheaper, but you avoid the so-called 'integration tax' (i.e., you don't have to put any effort into configuring/integrating the services together) as the service does it all for you. It’s easy to underestimate the cost of people spending hours gluing different services together and maintaining that glue over time.

At Tinybird, we do the hard work of building real-time data ingestion tools for ClickHouse®, managing ClickHouse® at scale, providing robust and constantly improving support for querying your data, and helping you easily expose your data as high-concurrency, low-latency APIs to be consumed within your products or services. To do the same work as Tinybird within your company would cost you several FTEs of time and considerable Cloud resources, which could probably be better used to offer differentiated value.

“Tinybird is a force multiplier. It unlocks so many possibilities without having to hire anyone or do much additional work. It’s faster than anything we could do on our own.” - Senior Data Engineer at a Global Sports Betting Company

7. Choose better, more modern tools

Finally, consider choosing better, more modern tools that can significantly increase productivity. By using the right tools, you can free up your team to take on more work and achieve more with less.

Earlier, I covered some examples of using best-of-breed services instead of commodity AWS equivalents. In recent years, several new services have become available that replace the need to build entire components of your cloud infrastructure yourself. Here are just a few examples:

- Tinybird: I’m biased, but I think Tinybird is awesome. As mentioned earlier, Tinybird is a real-time data pipeline platform designed to transform and analyze data in a simple, cost-effective way. With Tinybird, you can ingest data at scale, query and shape it using SQL, and publish it as high-concurrency, low-latency APIs. Instead of building and maintaining a custom data pipeline infrastructure, you can use Tinybird to handle data transformations, aggregation, and analysis, reducing the need for expensive data warehousing or ETL processes. Building and maintaining a custom data pipeline infrastructure may require a team of 3-4 data engineers, costing approximately $750,000 per year. In comparison, a Tinybird Pro subscription could cost as little as $50 per month (or less).

- Vercel: Vercel is a cloud platform for building, deploying, and scaling websites and applications. With Vercel, you can easily build and deploy serverless applications using a variety of programming languages, frameworks, and databases. Instead of building and maintaining a custom infrastructure for a web application, you can use Vercel to handle scaling, security, and deployment, freeing up resources to focus on core competencies. Building and maintaining a custom infrastructure for a typical medium-sized web application may require a team of 4-5 developers, designers, and DevOps engineers, costing approximately $1 million per year. Why not let Vercel do that work for you at a fraction of the cost?

- Prisma: Prisma is a next-generation ORM (Object-Relational Mapping) that enables businesses to easily build and scale modern database applications. With Prisma, you can quickly and easily connect to databases, generate API code, and deploy to production, reducing the need for complex infrastructure or database management. Building and maintaining a custom ORM infrastructure is a lot of plumbing work for a team that likely has bigger, more pressing priorities now.

- Tigris: Tigris is a modern data platform that combines a NoSQL database and search index into a single, unified tool. Many data teams today are achieving similar functionality by combining tools like Postgres, DynamoDB, and Elasticsearch, tools built in 1996, 2012, and 2010 respectively. While still powerful, these tools can suffer from architectural decisions made over a decade ago that negatively impact their efficiency, and they leave it to the user to handle integrating them. Like-for-like workloads can be up to 4x cheaper on Tigris than DynamoDB. Tigris also seeks to drastically improve the developer experience, providing carefully considered APIs and SDKs around the platform so that developers can focus on building valuable applications with fewer people, in less time.

- Estuary Flow: Many data teams are building change data capture (CDC) pipelines using a combination of Debezium, Kafka, and Kafka Connect. If you’ve built this, you’ll know it’s no cakewalk. The compute cost of running this entire pipeline in Estuary can be cheaper than the cost of simply running a Debezium server, let alone the Kafka compute costs and considerable human effort required to build and maintain it. Estuary avoids the need to run and manage multiple services, while also enabling additional use cases that weren’t possible before, such as performing aggregations in the pipeline to save on downstream processing and storage costs. Compared to tools like Fivetran or Airbyte, Estuary can cost up to 80% less for compute, while also delivering fresher, real-time data.

- Streamkap: Streamkap also seeks to challenge the complexity of integrations between Debezium and Kafka as well as the cost of tools like Airbyte and Fivetran. While Airbyte and Fivetran can enable 1-click data pipelines, they are notorious for being highly unoptimized, resulting in surprisingly huge bills for their users. Monthly Active Row pricing models on tools like Fivetran guarantee that, when users begin to scale, they will rapidly out-pace the pricing structure and must migrate pipelines elsewhere. Streamkap aims to not only reduce costs but enable real-time streaming data movement that its batch counterparts simply cannot achieve.

Legacy tech might seem safe, but that perceived safety comes at a premium. Consider smaller, newer tool vendors that do a better job for less.

The combination of compute- and people-efficiency offered by modern tools can provide significant cost savings. These tools have built upon the lessons learned over the past decade, throwing away outdated models and building services that are highly optimized for today's workloads.

Under the hood, they use cutting-edge technology that is able to perform the same work, in less time, with fewer resources than their legacy alternatives. At the same time, each tool has built beautiful developer experiences that allow one individual to achieve what used to take multiple teams.

Looking for ways to offload processes that are becoming increasingly commoditized helps free your team’s resources to focus on business differentiation.

Summary

Reducing cloud infrastructure spending is an ongoing challenge for businesses. By taking an inventory of your current setup, identifying commodity tech, optimizing your data processing, and choosing the right tools, you can significantly reduce your cloud spending without sacrificing performance or reliability. With the right strategies and tools in place, businesses can achieve more with less and thrive in the ever-evolving cloud landscape of 2023.

And, if you’re not yet a Tinybird customer, you can sign up for free (no credit card required) and get started today. Also, feel free to join the Tinybird Community on Slack and ask us any questions (about Tinybird or anything in this blog post) or request any additional features.