Tinybird Agent Skills

We've open-sourced Tinybird Agent Skills, a collection of packaged instructions that enable AI coding agents to work with Tinybird projects.

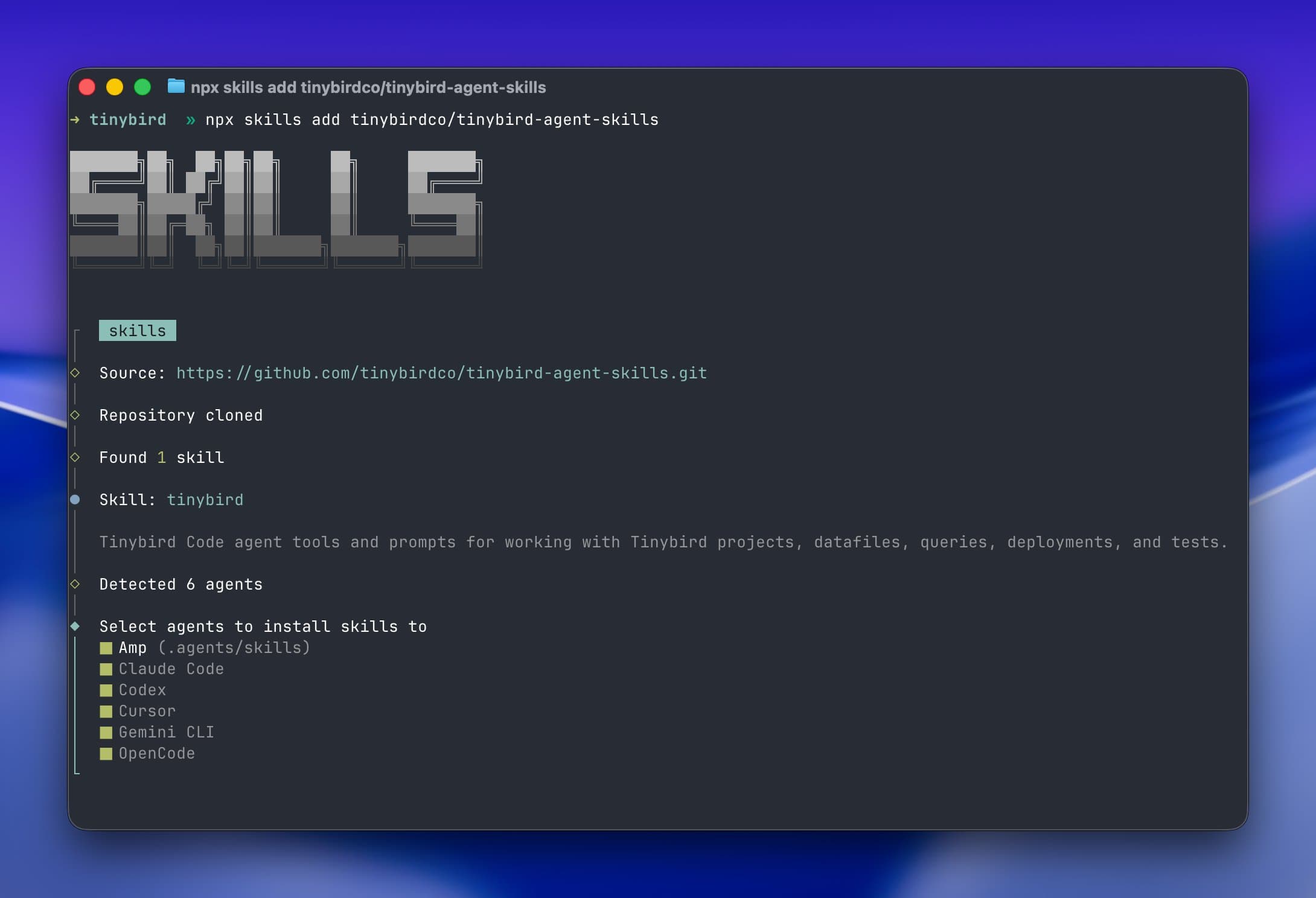

Skills follow the Agent Skills open standard and work with Claude Code, Cursor, Codex, Amp, Gemini CLI, OpenCode, and many others. The tinybird-best-practices skill includes rule files covering Data Sources, Pipes, Endpoints, SQL optimization, deployments, and testing: everything your coding agent needs to build real-time analytics on Tinybird.

Install with:

npx skills add tinybirdco/tinybird-agent-skills

This is the same knowledge that powers Tinybird Code, now available to all agents.

Cancel queries in Time Series (Classic)

You can now cancel running queries in the Time Series visualization. When you navigate away or start a new query, the previous request is canceled instead of continuing in the background.
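The "latest request wins" behavior described above can be sketched in a few lines. This is only an illustration of the general pattern using Python's `asyncio`, not the Time Series implementation; `LatestOnly`, `fake_query`, and `demo` are hypothetical names introduced for the example.

```python
import asyncio


class LatestOnly:
    """Keeps at most one request in flight: starting a new one cancels the old one."""

    def __init__(self):
        self._task = None  # the in-flight asyncio.Task, if any

    def start(self, coro):
        # Cancel the previous request instead of letting it run in the background.
        if self._task is not None and not self._task.done():
            self._task.cancel()
        self._task = asyncio.create_task(coro)
        return self._task


async def fake_query(name, seconds):
    # Hypothetical stand-in for a slow query.
    await asyncio.sleep(seconds)
    return name


async def demo():
    runner = LatestOnly()
    first = runner.start(fake_query("first", 1.0))
    await asyncio.sleep(0)  # give the first query a chance to start
    second = runner.start(fake_query("second", 0.01))
    result = await second
    # Let the cancellation of the first task settle without raising here.
    await asyncio.gather(first, return_exceptions=True)
    return first.cancelled(), result
```

Calling `asyncio.run(demo())` starts two queries back to back; the first is canceled as soon as the second begins, and only the second produces a result.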

Bug fixes and improvements

- [All]: The Workspace name now correctly appears in the shared-with tooltip, even when that Workspace isn't in your accessible list.

- [All]: Fixed an issue where TTL validation would incorrectly flag valid configurations.

- [All]: The SQL query size limit has been increased to 128KB (up from 8KB), and validation is now consistent across all API paths.

- [All]: Fixed `CREATE VIEW` generation for DataHub lineage compatibility.

- [Classic]: Improved error messages for Copy Pipe timeout errors.
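For the new SQL query size limit mentioned above, a client-side check before sending a query might look like the sketch below. It rests on two assumptions that the changelog does not confirm: that "128KB" means 128 × 1024 bytes, and that the limit applies to the UTF-8 encoded query text. `MAX_QUERY_BYTES`, `query_size_bytes`, and `fits_query_limit` are illustrative names, not part of any Tinybird API.

```python
# Assumption: "128KB" means 128 * 1024 bytes of UTF-8 encoded SQL.
MAX_QUERY_BYTES = 128 * 1024


def query_size_bytes(sql: str) -> int:
    """Return the size of the query text in bytes when encoded as UTF-8."""
    return len(sql.encode("utf-8"))


def fits_query_limit(sql: str, limit: int = MAX_QUERY_BYTES) -> bool:
    """Return True if the query is within the assumed size limit."""
    return query_size_bytes(sql) <= limit
```

Measuring bytes rather than characters matters for queries that contain non-ASCII literals, since a single character can take several bytes.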

Breaking changes

- The Tinybird CLI v3.0.0 now requires Python 3.10 or higher. Python 3.9 is no longer supported. Upgrade Python before updating to tinybird 3.0.0.

- Support for Join Tables has been removed. We made JOINs 100x faster in ClickHouse, so Join Tables no longer provide a benefit. Migrate to standard MergeTree tables with regular JOIN queries.

- The deprecated CDK-based Snowflake and BigQuery connectors have been removed. Use S3 or GCS connectors instead.
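For the Python requirement in the first item above, checking the interpreter version before upgrading the CLI can avoid a failed install. A minimal sketch using only the standard library; `MIN_PYTHON` and `python_is_supported` are hypothetical names for this example and are not part of the Tinybird CLI.

```python
import sys

# Minimum version stated in the breaking change above.
MIN_PYTHON = (3, 10)


def python_is_supported(version_info=None):
    """Return True if the given (or current) Python version is 3.10 or higher."""
    if version_info is None:
        version_info = sys.version_info
    # Compare only (major, minor); the patch level does not matter here.
    return tuple(version_info[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    if python_is_supported():
        print("Python version is compatible with tinybird 3.0.0")
    else:
        print("Upgrade to Python 3.10 or higher before updating")
```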

From the blog

How we built a production Kafka connector for ClickHouse: A deep dive into how we built our Kafka connector that processes billions of events daily. Covers architecture decisions, scaling with rendezvous hashing, circuit breaker patterns, and why Python works for high-throughput pipelines.